How to build a GraphQL Gateway with Spring Boot and Kotlin

With Spring Boot + Kotlin + Coroutines + GraphQL-java-kickstart, you can build a GraphQL Gateway with a minimum of boilerplate.

Up and Running

The code is available in the spring-playground repository on GitHub, in the graphql-gateway folder.

Run the server like this:

git clone https://github.com/jmfayard/spring-playground

cd spring-playground/graphql-gateway

./gradlew bootRun

Open GraphiQL at http://localhost:8080/

Animal facts

With this project up and running, you can fetch animal facts using a GraphQL Query.

Enter this query:

    query {
      dog {
        fact
        length
        latency
      }
      cat {
        fact
        length
        latency
      }
    }

Run the query, and you will see something like this:

If you are new to GraphQL, read this introduction from @methodcoder; I will wait.

https://medium.com/media/63ea00cc1e27ec6be082d27c75f797cc/href

Cat facts and dog facts

Where do the animal facts come from?

The server knows about two REST APIs.

The first one is about cat facts:

    $ http get https://catfact.ninja/fact
    {
      "fact": "Isaac Newton invented the cat flap. Newton was experimenting in a pitch-black room. Spithead, one of his cats, kept opening the door and wrecking his experiment. The cat flap kept both Newton and Spithead happy.",
      "length": 211
    }

And the second one is about dog facts:

    $ http get https://some-random-api.ml/facts/dog
    {
      "fact": "A large breed dog's resting heart beats between 60 and 100 times per minute, and a small dog breed's heart beats between 100-140. Comparatively, a resting human heart beats 60-100 times per minute."
    }

By building a simple gateway, we take on complexity so that the front-end developers have one less thing to worry about:

- We take care of calling the multiple endpoints and combining them, becoming a backend-for-frontend.

- We offer a nice GraphQL schema to the front-end(s).

- We normalize the response format — dog facts have no length attribute, but we can compute it!

- We can potentially reduce the total response time. Without the gateway, the front-end would do two round-trips of, let’s say, 300 ms each, so 600 ms in total. With the gateway, there is one round-trip of 300 ms between the front-end and the gateway, plus two round-trips between the gateway and the facts servers. If those are located on the same network, they could be done in 10 ms each, for a total of 320 ms.
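The last two points can be made concrete with a small sketch. Everything here is illustrative: the payload classes, the `normalize` helpers and the latency functions are hypothetical names, not code from the project, and the latency figures assume the calls happen one after another.

```kotlin
// Hypothetical shapes for the two upstream payloads shown above.
data class CatFactPayload(val fact: String, val length: Int)
data class DogFactPayload(val fact: String)

// The single, normalized shape the gateway exposes for both animals.
data class AnimalFact(val fact: String, val length: Int)

fun CatFactPayload.normalize() = AnimalFact(fact, length)

// Dog facts carry no length attribute, so the gateway computes it from the text.
fun DogFactPayload.normalize() = AnimalFact(fact, fact.length)

// Back-of-the-envelope latency, assuming sequential calls.
fun withoutGatewayMs(clientRoundTripMs: Int, upstreamCalls: Int): Int =
    clientRoundTripMs * upstreamCalls

fun withGatewayMs(clientRoundTripMs: Int, internalRoundTripMs: Int, upstreamCalls: Int): Int =
    clientRoundTripMs + internalRoundTripMs * upstreamCalls

fun main() {
    // Made-up sample text, only there to show the computed length.
    println(DogFactPayload("A sample dog fact").normalize())
    println(withoutGatewayMs(clientRoundTripMs = 300, upstreamCalls = 2)) // 600
    println(withGatewayMs(clientRoundTripMs = 300, internalRoundTripMs = 10, upstreamCalls = 2)) // 320
}
```

If the gateway calls both servers concurrently, the internal part would shrink further, but that goes beyond the estimate given above.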

So, how do we build that gateway?

Dependencies

If you start a new project from scratch via https://start.spring.io/, you will need to add those dependencies:

- Spring Webflux

- GraphQL-java

- GraphQL-java-kickstart libraries

Note that I’m using gradle refreshVersions to make it easy to keep the project up-to-date. Therefore, the versions are not defined in the build.gradle files; they are centralized in the versions.properties file. refreshVersions is bootstrapped like this in settings.gradle.kts:

https://medium.com/media/dbf2e6d4c43feb33b55a03a17f3d2745/href

GraphQL-schema first

GraphQL-java-kickstart uses a schema-first approach.

We first define our schema in resources/graphql/schema.graphqls:

https://medium.com/media/1291e72dbc48136069a2020704b51c0d/href

Then, we tell Spring where our GraphQLSchema comes from:

https://medium.com/media/b38095cf5b3757ebd8e33dc5602da64a/href

Spring wants at least a GraphQLQueryResolver, the class responsible for implementing GraphQL queries.

We will define one, but keep it empty for now:

    @Component
    class AnimalsQueryResolver() : GraphQLQueryResolver {
    }

GraphQLQueryResolver

If we start our application with ./gradlew bootRun, we will see it fail fast with this error message:

    FieldResolverError: No method or field found as defined in schema graphql/schema.graphqls:2
    with any of the following signatures
    (with or without one of [interface graphql.schema.DataFetchingEnvironment] as the last argument),
    in priority order:

      dev.jmfayard.factsdemo.AnimalsQueryResolver.cat()
      dev.jmfayard.factsdemo.AnimalsQueryResolver.getCat()
      dev.jmfayard.factsdemo.AnimalsQueryResolver.cat

The schema, which is the single source of truth, requires something to implement a cat query, but we didn’t have that in the code.

To make Spring happy, we make sure our Query Resolver has the same shape as the GraphQL schema:

https://medium.com/media/0d2edf3a38f5a0fd7dcea24a01eba212/href

Notice that you can directly define a suspending function, without any additional boilerplate, to implement the query.
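To give an idea of what "the same shape as the schema" means, here is a minimal sketch that runs without Spring or the GraphQL libraries. It is not the code from the repository: `GraphQLQueryResolver` is redeclared locally as a stand-in for the library's marker interface, the `@Component` annotation is left out, and `Fact` and `placeholderFact` are hypothetical names whose field types are guessed from the query.

```kotlin
// Stand-in for the marker interface provided by GraphQL-java-kickstart,
// declared locally so that this sketch compiles without the library.
interface GraphQLQueryResolver

// Hypothetical return type carrying the three fields used in the query.
data class Fact(val fact: String, val length: Int, val latency: Long)

// Placeholder data, so the resolver can return something before any HTTP call exists.
fun placeholderFact(text: String) = Fact(fact = text, length = text.length, latency = 0)

class AnimalsQueryResolver : GraphQLQueryResolver {
    // The method names mirror the query fields `cat` and `dog`.
    suspend fun cat(): Fact = placeholderFact("placeholder cat fact")
    suspend fun dog(): Fact = placeholderFact("placeholder dog fact")
}

// `suspend fun main` lets us call the suspending resolver methods directly.
suspend fun main() {
    val resolver = AnimalsQueryResolver()
    println(resolver.cat())
    println(resolver.dog())
}
```

The point is only the correspondence: one method per query field, named after it, and free to be a suspending function.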

Run ./gradlew bootRun again, and now Spring starts!

We go one step further by forwarding the calls to an AnimalsRepository:

https://medium.com/media/9ee243fede53ce85853e83574d996465/href

How do we implement this repository? We need an HTTP client.

Suspending HTTP calls with ktor-client

We could have used the built-in reactive WebClient that Spring provides, but I wanted to use ktor-client to keep things as simple as possible.

First, we have to add the dependencies for ktor-client, okhttp and kotlinx.serialization, then configure our client.

See the commit Configure ktor-client, okhttp & kotlinx.serialization

The most interesting part is here:

https://medium.com/media/e7cdcf169aae2a925e61c6317d10900e/href

Simple or non-blocking: why not both?

When I see the code above, I am reminded that I love coroutines.

We get to write code in a simple, direct style like in the old days when we were writing blocking code in a one-thread-per-request model.

Here it’s essential to write non-blocking code: the gateway spends most of its time waiting for the two other servers to answer.

Code written using some kind of promise or reactive streams is therefore clearly more efficient than blocking code.

But those require you to “think in reactive streams” and make your code look quite different.

With coroutines, we get the efficiency and our code is as simple as it gets.

Resilience via a Circuit Breaker

We have a gateway, but it’s a bad gateway.

More precisely, it’s as bad as the worst of the servers it depends on to do its job.

If one server throws an error systematically or gets v e r y s l o w, our gateway follows blindly.

We don’t want the same error to reoccur constantly, and we want to handle the error quickly without waiting for the TCP timeout.

We can make our gateway more resilient by using a circuit breaker.

Resilience4j provides such a circuit breaker implementation.

We first add and configure the library.

See the commit: add a circuit breaker powered by resilience4j.

The usage is as simple as it gets:

https://medium.com/media/a85ff23fcfeea577b3a619daf5817efa/href
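For readers who have never met the pattern, here is a hand-rolled sketch of what a circuit breaker does. To be clear, this is not Resilience4j's API and not the code from the commit; `SimpleCircuitBreaker`, `CircuitOpenException` and their parameters are hypothetical names, and the sketch is neither thread-safe nor coroutine-aware.

```kotlin
// Minimal circuit breaker, for illustration only. All names are hypothetical.
class CircuitOpenException : RuntimeException("circuit is open, call rejected")

class SimpleCircuitBreaker(
    private val failureThreshold: Int,
    private val openDurationMs: Long,
    private val now: () -> Long = System::currentTimeMillis
) {
    private var consecutiveFailures = 0
    private var openedAt: Long? = null

    fun <T> call(block: () -> T): T {
        val opened = openedAt
        if (opened != null) {
            // While open, fail fast instead of waiting on a broken upstream.
            if (now() - opened < openDurationMs) throw CircuitOpenException()
            // Cool-down elapsed: let a trial call through (half-open).
            // A single failure of that trial call re-opens the circuit.
            openedAt = null
            consecutiveFailures = failureThreshold - 1
        }
        return try {
            block().also { consecutiveFailures = 0 }
        } catch (e: Exception) {
            consecutiveFailures++
            if (consecutiveFailures >= failureThreshold) openedAt = now()
            throw e
        }
    }
}

fun main() {
    var clock = 0L // fake clock, so the example does not need to sleep
    val breaker = SimpleCircuitBreaker(failureThreshold = 2, openDurationMs = 1_000, now = { clock })

    // Two failures in a row open the circuit.
    repeat(2) { runCatching { breaker.call<String> { error("upstream down") } } }

    // The next call is rejected immediately, without touching the upstream.
    println(runCatching { breaker.call { "dog fact" } }.exceptionOrNull() is CircuitOpenException) // true

    // After the cool-down, calls are let through again.
    clock = 1_500
    println(breaker.call { "dog fact" }) // dog fact
}
```

A real library adds what this sketch leaves out: sliding windows, slow-call detection, metrics and safe concurrent use.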

I want to learn more

See spring-playground/graphql-gateway

The talk that inspired this article: KotlinFest2019「Future of Jira Software powered by Kotlin」 #kotlinfest — YouTube

https://medium.com/media/79fe002f558d781672934e302da05b1a/href

Documentation of the libraries used in this project:

- Getting started with a Ktor client | Ktor

- About GraphQL Spring Boot — GraphQL Java Kickstart

- About GraphQL Java Tools — GraphQL Java Kickstart

- graphql-java/graphql-java: GraphQL Java implementation

- Resilience4j

Another approach: Creating a Reactive GraphQL Server with Spring Boot and Kotlin

If you want to contact me, there is a standing invitation at https://jmfayard.dev/contact/.


How to build a GraphQL Gateway with Spring Boot and Kotlin was originally published in Kt. Academy on Medium, where people are continuing the conversation by highlighting and responding to this story.

Serverless Kotlin with OpenFaaS

Serverless Kotlin on OpenFaaS

With this article, my goal is to demonstrate what Serverless Kotlin can look like by introducing you to one of the coolest Serverless platforms: OpenFaaS. OpenFaaS is an open-source, community-owned project that you may use to run your functions and microservices on any public or private cloud. You can run your Docker image on OpenFaaS, which runs and scales it for you. As a result, you are free to choose any programming language as long as it can be packaged into a Docker image. Throughout this post, we want to learn about the concepts behind Serverless and Function as a Service (FaaS), and how we can deploy Serverless Kotlin functions to OpenFaaS.

Serverless and Function as a Service

Serverless Computing

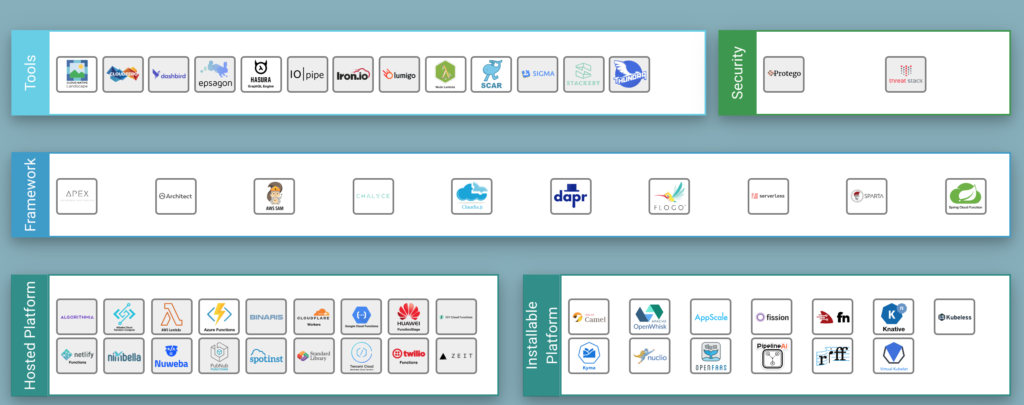

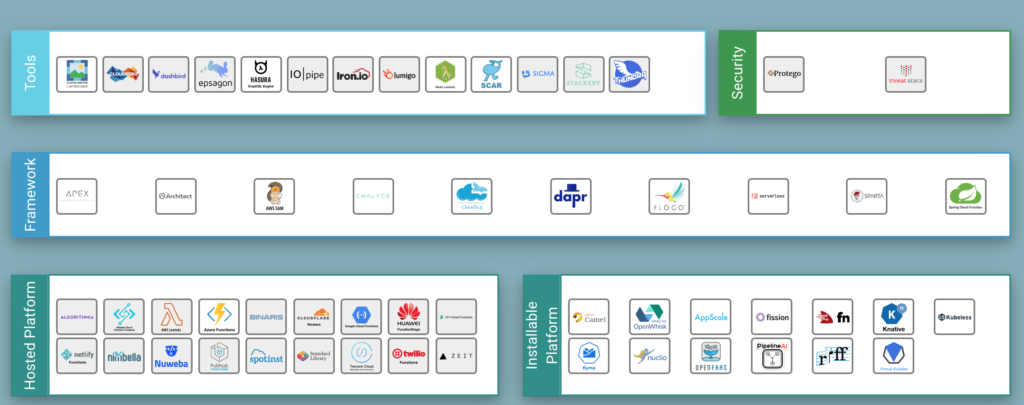

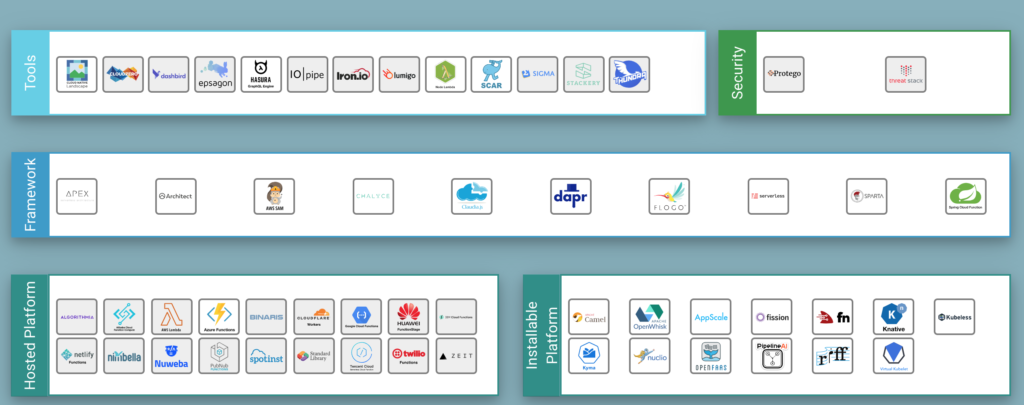

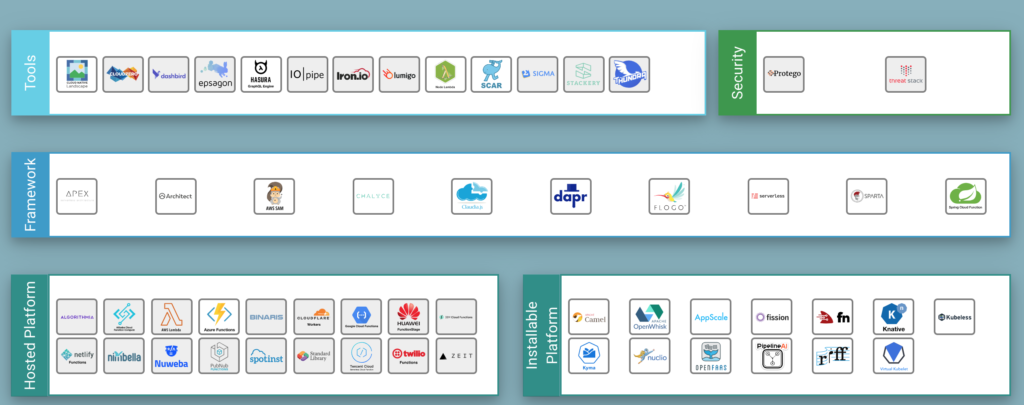

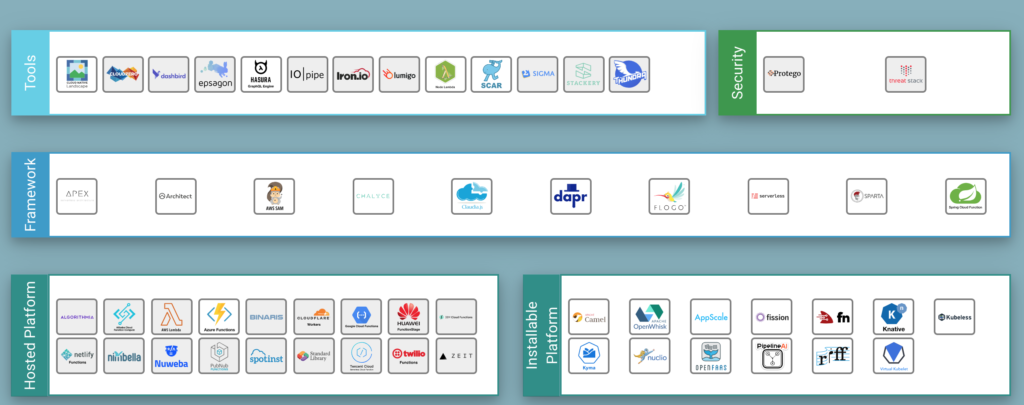

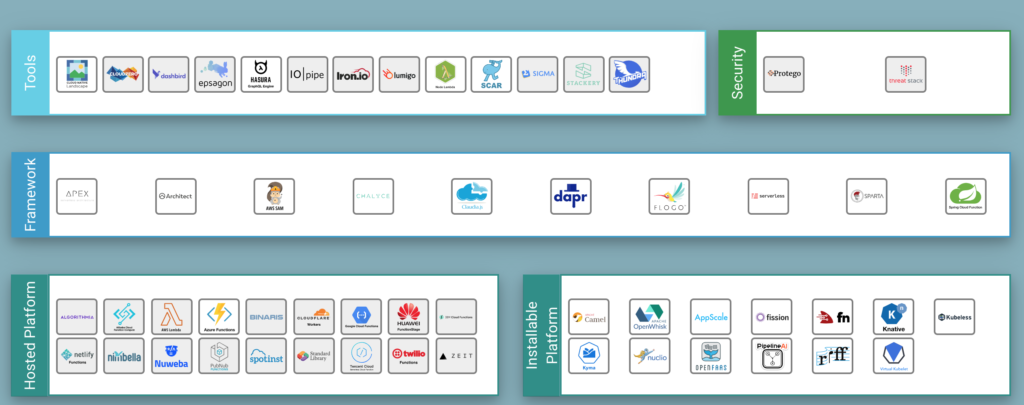

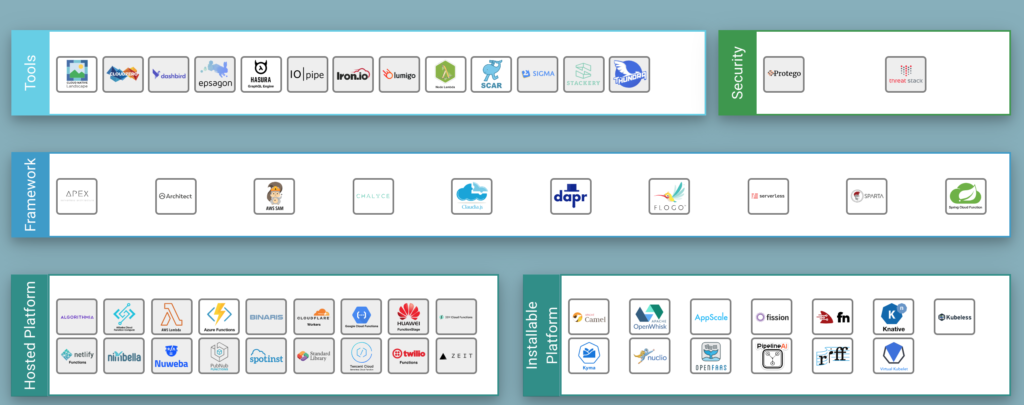

With Serverless Computing, we describe a cloud model in which server management and infrastructure decisions don’t have to be tackled by the developers, but are taken care of by the cloud providers themselves. The term “Serverless” describes the fact that we don’t have to care much about infrastructure setup, scaling, and maintenance and that we can focus on developing code which can easily be deployed into production. The Serverless opportunities seem endless, and so does the landscape map published by the Cloud Native Computing Foundation (CNCF), which you can find here.

Serverless architecture is said to be the next big thing and somewhat the advancement of microservices: Monolith -> Microservices -> Serverless architecture

Function as a Service

Among the most essential Serverless offerings are so-called compute runtimes, also known as function as a service (FaaS) platforms. Many vendors provide these platforms, with the most prominent ones being AWS Lambda, Google Cloud Functions, and Microsoft Azure Functions. There are also open-source alternatives, such as Apache OpenWhisk or Oracle’s Fn Project. Many more tools and platforms exist, which are also part of the landscape map shown above.

The general idea behind FaaS is to offer a platform that can be used to execute code triggered by some event. The code can be deployed without maintaining infrastructure and just by uploading functionality to the cloud, which takes care of executing and also scaling the function. “Functions” in the context of FaaS are rather small units that generally should be stateless and can, as a result, easily be scaled horizontally. A FaaS platform will not only scale out your code if it’s under heavy load but also take care of removing instances if the function has not been invoked much for a while. This technique, for starters, helps to optimize costs but also requires awareness of cold start situations. Serverless overall has relevant positive characteristics and should be part of our discussions around reliable architecture alternatives. If you want to learn a bit more about the concepts and what Serverless architecture entails, I recommend watching “Serverless: the Future of Software Architecture” by Peter Sbarski. To be clear, I personally don’t believe that you should go Serverless no matter what, but rather see it as a valid concept that might solve parts of your problems.

OpenFaaS – Containers as Functions

OpenFaaS, as explained on their website, “makes it simple to turn anything into a Serverless function that runs on Linux or Windows through Docker Swarm or Kubernetes”. They promise that it lets us run any code anywhere at any scale. You could describe OpenFaaS as a “containers as functions” platform since its form of abstracting functions is a Docker image. That characteristic is an excellent trait as it allows us to package any code into a Docker image, and OpenFaaS runs it, scales it, and also provides metrics for us. One caveat is worth mentioning: a specific tool called Function Watchdog needs to be added to your containers. It is a tiny Golang HTTP server that connects your function with the outside world. OpenFaaS itself runs on, e.g., Kubernetes or Docker Swarm, is open source under the MIT license, and is written in Go. You can find the GitHub project here.

No vendor lock-in

OpenFaaS relies on Docker images used to package our code, and the tool itself runs on platforms such as Kubernetes. The community has adopted all of these technologies and knows how to use them. As a result, you may move your OpenFaaS instance around to and from any public or private cloud without issue. OpenFaaS does not make you dependent on a particular vendor, which is contrary to what you get when relying on technologies like AWS Lambda. Being dependent on a particular vendor is known as vendor lock-in.

Other similar projects

The idea of deploying independent containers to a compute engine can be discovered in a few more projects. You can find a comparison of multiple similar tools here.

Serverless Kotlin deployed on OpenFaaS

Now that we’ve learned about the general ideas behind Serverless, FaaS and what OpenFaaS does, we want to get our hands a bit dirty by setting up an OpenFaaS Kubernetes cluster and learning how we can deploy functions to it.

OpenFaaS Deployment using Kubernetes

The following is based on the official guide. Read it to get more information and learn about alternative approaches.

As a first step, you need to make sure to have a Kubernetes cluster set up. If you want to run Kubernetes on a local machine, various tools can help you set up the cluster (e.g. k3s or minikube). On Mac and Windows, you can also use Docker’s desktop edition to run a Kubernetes cluster locally.

After having set up Kubernetes, we can start deploying OpenFaaS to the cluster. It’s incredibly easy using k3sup:

# Install k3sup

curl -sLS https://get.k3sup.dev | sh

sudo install k3sup /usr/local/bin/ # <- this step might not be necessary

chmod +x k3sup

# applies the openfaas namespaces, creates a user, applies the helm chart

k3sup app install openfaas

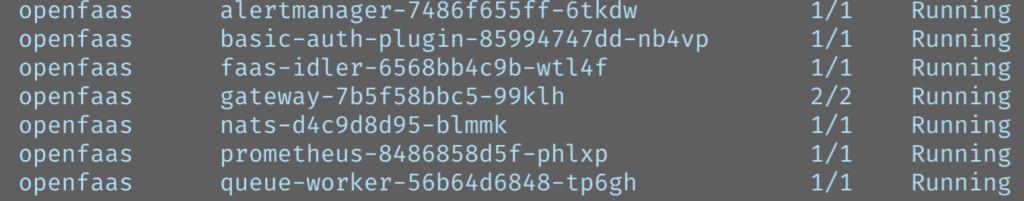

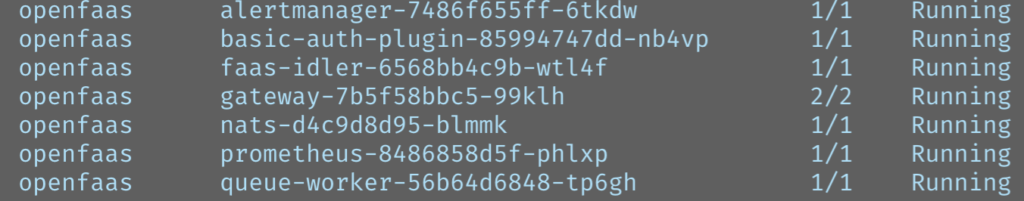

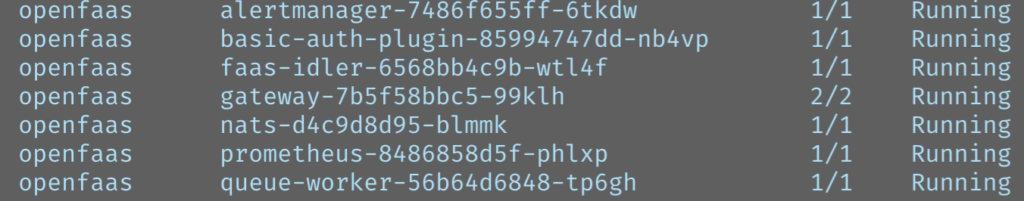

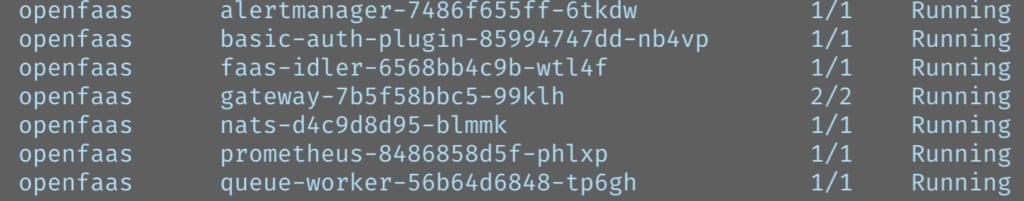

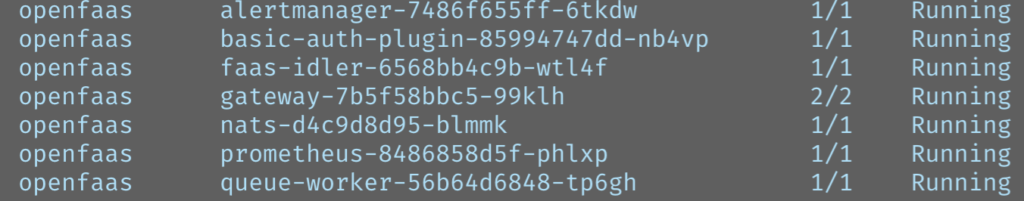

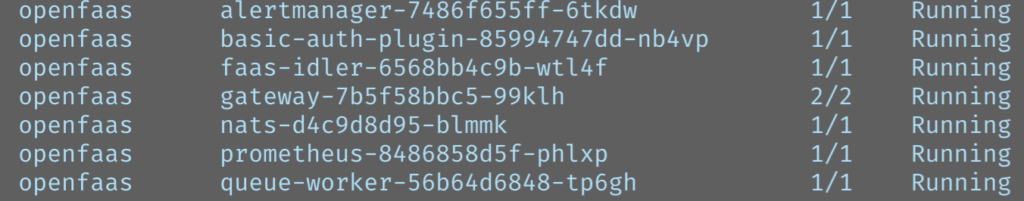

After that, inspecting your cluster, you will find several running OpenFaaS pods.

You should be able to view the gateway UI via http://127.0.0.1:31112/ui/ where you log in using the credentials generated by k3sup. You can get the password via kubectl:

PASSWORD=$(kubectl get secret -n openfaas basic-auth -o jsonpath="{.data.basic-auth-password}" | base64 --decode; echo)
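The `base64 --decode` step is needed because Kubernetes stores secret values base64-encoded. As a small illustration of that decoding step only, here is the same operation in Kotlin; `decodeSecret` is a hypothetical helper and the sample value is made up.

```kotlin
import java.util.Base64

// Decodes a base64-encoded secret value, like `base64 --decode` does in the shell.
fun decodeSecret(encoded: String): String =
    String(Base64.getDecoder().decode(encoded), Charsets.UTF_8)

fun main() {
    // "czNjcjN0" is simply the base64 form of the made-up sample value "s3cr3t".
    println(decodeSecret("czNjcjN0")) // s3cr3t
}
```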

To allow faas-cli (needs to be installed) to access your newly deployed services, make sure to

1) set OPENFAAS_URL via export OPENFAAS_URL=http://127.0.0.1:31112

2) log in via faas-cli login --password {YOUR_PASSWORD_HERE}

The following script logs you in automatically:

PASSWORD=$(kubectl get secret -n openfaas basic-auth -o jsonpath="{.data.basic-auth-password}" | base64 --decode; echo)

echo -n "$PASSWORD" | faas-cli login --username admin --password-stdin

That’s it – We’re all set and can start deploying Serverless functions. 🎉

Creating and Deploying an OpenFaaS function

OpenFaaS offers different means and interfaces for deploying functions to the platform. We may use the CLI, the gateway UI, or the provided REST API, which is documented via this Swagger yaml.

Templates

The most straightforward way for getting started is via OpenFaaS’ template engine. With the faas-cli, we can create new functions based on available templates existing for various programming languages and tools. To get a list of all existing templates, you should run faas-cli template pull first. Running faas-cli new --list now, you should see a list of templates similar to the following:

csharp csharp-armhf dockerfile dockerfile-armhf go go-armhf java12 java8 node node-arm64 node-armhf node12 php7 python python-armhf python3 python3-armhf ruby

More templates are available via the OpenFaaS Store, which you can examine by running faas-cli template store list. Neither source currently contains a Kotlin template, which I would love to change. Therefore, a change request is awaiting feedback and will hopefully add an official Kotlin template to OpenFaaS.

On the bright side, it is quite easy to add my Kotlin templates to the cli by executing faas-cli template pull https://github.com/s1monw1/openfaas-kotlin. You should find two additional Kotlin variants now via faas-cli new --list. Let’s create a classic Kotlin function using the kotlin template:

faas-cli new hello-readers --lang=kotlin

# function can be found in ./hello-readers

By convention, OpenFaaS only exposes a particular part of each template to the user. If you want to look into that further, check out the Kotlin template and see how it is structured. The function part is the one we have to deal with after running the faas-cli new command mentioned above.

Modify the template

In the newly generated function hello-readers, we can find a source file Handler.kt, which is the spot where we can implement the HTTP handling for our function. It’s a simple request-response mapper, and to demo it, we simply change the default body to "Hello kotlinexpertise readers".

    class Handler : IHandler {
        override fun handle(request: IRequest): IResponse {
            return Response(body = "Hello kotlinexpertise readers")
        }
    }
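To make the request-response mapping a bit more tangible, here is a self-contained sketch of a handler that actually reads the request. The `IRequest`, `IResponse`, `IHandler`, `Request` and `Response` types below are local stand-ins declared only for this example; the real types shipped with the template may look different, so treat their properties as assumptions.

```kotlin
// Local stand-ins for the template's types, so the sketch runs on its own.
interface IRequest { val body: String }
interface IResponse { val body: String; val statusCode: Int }
interface IHandler { fun handle(request: IRequest): IResponse }

data class Request(override val body: String) : IRequest
data class Response(override val body: String, override val statusCode: Int = 200) : IResponse

class Handler : IHandler {
    override fun handle(request: IRequest): IResponse {
        // Greet whoever is named in the request body, with a default for empty bodies.
        val name = request.body.trim().ifEmpty { "kotlinexpertise readers" }
        return Response(body = "Hello $name")
    }
}

fun main() {
    println(Handler().handle(Request("")).body)         // Hello kotlinexpertise readers
    println(Handler().handle(Request("OpenFaaS")).body) // Hello OpenFaaS
}
```

Because the handler keeps no state between calls, the platform is free to run as many copies of it as the load requires.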

Build and Deploy

Build the function

Next to the hello-readers folder, OpenFaaS should have generated a YAML file called hello-readers.yml. It contains the information the cli needs to build and deploy our function. You can learn more about the relevant YAML structure and how you may want to modify it here. Let’s build it.

# by default, OpenFaaS looks for a stack.yml file which can be adjusted using the -f flag

faas-cli build -f hello-readers.yml

OpenFaaS now runs the Dockerfile contained in the template (not visible to the user) to build the image which we can then deploy. There’s also a faas-cli up command available that wraps the build, push and deploy commands for our convenience. Either way, whether you use faas-cli up or faas-cli deploy, the result looks similar to the following output.

A successful deployment

> faas-cli deploy -f hello-readers.yml

Deploying: hello-readers.

WARNING! Communication is not secure, please consider using HTTPS. Letsencrypt.org offers free SSL/TLS certificates.

Deployed. 202 Accepted.

URL: http://127.0.0.1:31112/function/hello-readers

Clicking that URL reveals the message we previously configured: “Hello kotlinexpertise readers”. You can also examine the deployed function via the gateway UI.

Why would I use Kotlin to develop Serverless?

In the previous section, we saw that we could choose from a wide variety of programming languages to write Serverless functions for OpenFaaS. What language works best for you and makes the most sense depends on the use case and what you’re trying to achieve. Common Serverless languages are, e.g., Node, Python, and Go, but you’ll also find C#, Java, and even more in use.

Having a limited set of programming languages in a company probably leads to better internal tooling and also mitigates the issue of not being able to switch teams easily due to language barriers. I’m not a big fan of having hundreds of services all written in different languages, which most likely would become problematic at some point. Most bigger tech companies limit their language portfolio and only add new ones if circumstances demand it.

I personally have been using Kotlin for almost four years now, and it was clear that I would also use it to explore the Serverless space. We at bryter started using Kotlin Serverless functions not too long ago, and it’s working out well so far. Most of our backend services run Kotlin, and it’s the language we’re most experienced with. Nevertheless, we know that it might become necessary to add more languages, e.g., for performance reasons. The JVM footprint cannot be ignored and might impact performance here and there, for instance, when it comes to cold starts. To tweak things a bit, GraalVM could be a good option.

In a previous post, I wrote about the Server as a Function toolkit http4k, which is a fantastic library to enable Serverless functions in Kotlin. Http4k backs the functions we use at bryter, and I also proposed a corresponding template for the OpenFaaS store.

Serverless tooling for Kotlin

A pretty new framework named kotless (Kotlin Serverless) is currently being developed by JetBrains with the goal of making it easy to turn Kotlin applications into ready-to-deploy functions and even handle the deployment, which currently only works with AWS Lambda. The framework has a few very interesting features, and I’m looking forward to seeing where kotless is heading.

Summary and Outlook

Serverless approaches and related architectural implications are relevant and something we should be aware of when considering approaches to modern software architecture. Function as a service, as a Serverless-related topic, has been around for a while. The big cloud players Amazon, Google, and Microsoft provide the most prominent tools. Still, we can also get around vendor lock-in by choosing more independent platforms like the one I presented to you in this article: OpenFaaS. We saw that setting up OpenFaaS is no rocket science, and it can run on your local machine, which is an excellent way to get started. You can choose from a big set of programming languages and just need to make sure that your code can be packaged as a Docker image to be able to deploy it to OpenFaaS. Templates can help with setting up functions quickly, and it’s not too hard to write your own function templates if necessary. I have shown an example of a Kotlin template and what the resulting HTTP handler (representing the function) looks like. Kotlin is a valid alternative amongst many others, and you should decide on a case-by-case basis which programming language best suits your function’s needs. As I mentioned, I’ve been using the presented technologies for a while and currently work on a real-life use case that uses them to set up a dynamic, highly scalable and expandable platform, which I want to talk more about in upcoming articles.

The post Serverless Kotlin with OpenFaaS appeared first on Kotlin Expertise Blog.

Serverless Kotlin with OpenFaaS

Serverless Kotlin on OpenFaaS

With this article, my goal is to demonstrate how Serverless Kotlin can look like by introducing you to one of the coolest Serverless platforms: OpenFaaS. OpenFaaS is an open-source, community-owned project that you may use to run your functions and microservices on any public or private cloud. You can run your Docker image on OpenFaaS, which runs and scales it for you. As a result, you are free to choose any programming language as long as it can be packaged into a Docker image. Throughout this post, we want to learn about the concepts behind Serverless and Function as a Service (FaaS), and how we can deploy Serverless Kotlin functions to OpenFaaS.

Serverless and Function as a Service

Serverless Computing

With Serverless Computing, we describe a cloud model in which server management and infrastructure decisions don’t have to be tackled by the developers, but are taken care of by the cloud providers themselves. The term “Serverless” describes the fact that we don’t have to care much about infrastructure setup, scaling, and maintenance and that we can focus on developing code which can easily be deployed into production. The Serverless opportunities seem endless, and so does the landscape map published by the Cloud Native Computing Foundation (CNCF), which you can find here.

Serverless architecture is said to be the next big thing and somewhat the advancement of microservices: Monolith -> Microservices -> Serverless architecture

Function as a Service

One of the most essential Serverless offerings are so-called compute runtimes, also known as function as a service (FaaS) platforms. Many vendors provide these platforms, with the most prominent ones being AWS Lambda, Google Cloud Functions, and Microsoft Azure Functions. There are also open-source alternatives, such as Apache OpenWhisk or Oracle’s Fn Project. Many more tools and platforms exist, which are also part of the landscape map shown above.

The general idea behind FaaS is to offer a platform that can be used to execute code triggered by some event. The code can be deployed without maintaining infrastructure and just by uploading functionality to the cloud, which takes care of executing and also scaling the function. “Functions” in the context of FaaS are rather small units that generally should be stateless and can, as a result, easily be scaled horizontally. A FaaS platform will not only scale out your code if it’s under heavy load but also take care of removing instances if the function has not been invoked much for a while. This technique, for starters, helps to optimize costs but also requires awareness of cold start situations. Serverless overall has relevant positive characteristics and should be part of our discussions around reliable architecture alternatives. If you want to learn a bit more about the concepts and what Serverless architecture entails, I recommend watching “Serverless: the Future of Software Architecture” by Peter Sbarski. To be clear, I personally don’t believe that you should go Serverless no matter what, but rather see it as a valid concept that might solve parts of your problems.

OpenFaaS – Containers as Functions

OpenFaaS, as explained on their web site, “makes it simple to turn anything into a Serverless function that runs on Linux or Windows through Docker Swarm or Kubernetes”. They promise that it lets us run any code anywhere at any scale. You could describe OpenFaaS as a “containers as a function” platform since its form of abstracting functions is a Docker image. That characteristic is an excellent trait as it allows us to package any code into a Docker image, and OpenFaaS runs it, scales it, and also provides metrics for us. To be honest, it’s worth mentioning that a specific tool needs to be added to your containers, which is called Function Watchdog, a tiny Golang HTTP server which connects your function with the outside world. OpenFaaS itself runs on, e.g., Kubernetes or Docker Swarm is open source under MIT license, and is written in Go. You can find the GitHub project here.

No vendor lock-in

OpenFaaS relies on Docker images used to package our code, and the tool itself runs on platforms such as Kubernetes. The community has adopted all of these technologies and knows how to use them. As a result, you may move your OpenFaaS instance around to and from any public or private cloud without issue. OpenFaaS does not make you dependent on a particular vendor, which is contrary to what you get when relying on technologies like AWS Lambda. The fact of being dependent on a particular vendor is known as a vendor lock-in.

Other similar projects

The idea of deploying independent containers to a compute engine can be discovered in a few more projects. You can find a comparison of multiple similar tools here.

Serverless Kotlin deployed on OpenFaaS

Now that we’ve learned about the general ideas behind Serverless, FaaS and what OpenFaaS does, we want to get our hands a bit dirty by setting up an OpenFaaS Kubernetes cluster and learning how we can deploy functions to it.

OpenFaaS Deployment using Kubernetes

The following is based on the official guide. Read it to get more information and learn about alternative approaches.

As a first step, you need to make sure to have a Kubernetes cluster set up. If you want to run Kubernetes on a local machine, various tools can help you set up the cluster (e.g. k3s or minikube). On Mac and Windows, you can also use Docker’s desktop edition to run a Kubernetes cluster locally.

After having set up Kubernetes, we can start deploying OpenFaaS to the cluster. It’s incredibly easy using k3sup

# Install k3sup

curl -sLS https://get.k3sup.dev | sh

sudo install k3sup /usr/local/bin/ # <- this step might not be necessary

chmod +x k3sup

# applies openfaas namespaces, create user, applies helm chart

k3sup app install openfaas

After that, inspecting your cluster, you will find several running OpenFaaS pods.

You should be able to view the gateway UI via http://127.0.0.1:31112/ui/ where you log in using the credentials generated by k3sup. You can get the password via kubectl:

PASSWORD=$(kubectl get secret -n openfaas basic-auth -o jsonpath="{.data.basic-auth-password}" | base64 --decode; echo)

To allow faas-cli (needs to be installed) to access your newly deployed services, make sure to

1) set OPENFAAS_URL via export OPENFAAS_URL=http://127.0.0.1:31112

2) log in via faas-cli login --password {YOUR_PASSWORD_HERE}

The following script logs you in automatically:

PASSWORD=$(kubectl get secret -n openfaas basic-auth -o jsonpath="{.data.basic-auth-password}" | base64 --decode; echo)

echo -n $PASSWORD | faas-cli login --username admin --password-stdin

That’s it – We’re all set and can start deploying Serverless functions. 🎉

Creating and Deploying an OpenFaaS function

OpenFaaS offers different means and interfaces for deploying functions to the platform. We may use the CLI, the gateway UI, or the provided REST API, which is documented via this Swagger yaml.

Templates

The most straightforward way for getting started is via OpenFaaS’ template engine. With the faas-cli, we can create new functions based on available templates existing for various programming languages and tools. To get a list of all existing templates, you should run faas-cli template pull first. Running faas-cli new --list now, you should see a list of templates similar to the following:

csharp csharp-armhf dockerfile dockerfile-armhf go go-armhf java12 java8 node node-arm64 node-armhf node12 php7 python python-armhf python3 python3-armhf ruby

More templates are available via the OpenFaaS Store, which you can examine by running faas-cli template store list. Neither of both sources currently contains a Kotlin template, which I would love to change. Therefore, a change request is awaiting feedback and will hopefully add some official Kotlin to OpenFaaS.

On the bright side, it is quite easy to add my Kotlin templates to the cli by executing faas-cli template pull https://github.com/s1monw1/openfaas-kotlin. You should find two additional Kotlin variants now via faas-cli new --list. Let’s create a classic Kotlin function using the kotlin template:

faas-cli new hello-readers --lang=kotlin

# function can be found in ./hello-readers

By convention, OpenFaaS only exposes a particular part of each template to the user. If you want to look into that further, check out the Kotlin template and see how it is structured. The function is the one we have to deal with after running the faas-cli new command mentioned above.

Modify the template

In the newly generated function hello-readers, we can find a source file Handler.kt, which is the spot where we can implement the HTTP handling for our function. It’s a simple request-response mapper, and to demo it, we simply change the default body to "Hello kotlinexpertise readers".

class Handler : IHandler {

override fun handle(request: IRequest): IResponse {

return Response(body = "Hello kotlinexpertise readers")

}

}

Build and Deploy

Build the function

Next to the hello-readers folder, OpenFaaS should have generated a YAML file called hello-readers.yml. It contains the information the cli needs to build and deploy our function. You can learn more about the relevant YAML structure and how you may want to modify it here. Let’s build it.

# by default, OpenFaaS looks for a stack.yml file which can be adjusted using the -f flag

faas-cli build -f hello-readers.yml

OpenFaaS now runs the Dockerfile contained in the template (not visible to the user) to build the image which we can then deploy. There’s also a faas-cli up command available that wraps the build, push and deploy commands for our convenience. Either way, whether you use faas-cli up or faas-cli deploy, the result looks similar to the following output.

A successful deployment

> faas-cli deploy -f hello-readers.yml

Deploying: hello-readers.

WARNING! Communication is not secure, please consider using HTTPS. Letsencrypt.org offers free SSL/TLS certificates.

Deployed. 202 Accepted.

URL: http://127.0.0.1:31112/function/hello-readers

Clicking that URL reveals the message we previously configured: “Hello kotlinexpertise readers”. You can also examine the deployed function via the gateway UI.
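Instead of clicking the URL, the function can also be called from code. The following is a sketch only, assuming the gateway address and function name from the deploy output above and a JDK 11+ runtime for java.net.http; functionUri and invokeFunction are hypothetical helper names.

```kotlin
import java.net.URI
import java.net.http.HttpClient
import java.net.http.HttpRequest
import java.net.http.HttpResponse

// Builds the invocation URL; the /function/<name> path follows the deploy output.
fun functionUri(gateway: String, name: String): URI =
    URI.create("${gateway.trimEnd('/')}/function/$name")

// Sends the payload to the function and returns the response body as text.
// Throws an exception if the gateway is not reachable.
fun invokeFunction(gateway: String, name: String, payload: String = ""): String {
    val request = HttpRequest.newBuilder(functionUri(gateway, name))
        .POST(HttpRequest.BodyPublishers.ofString(payload))
        .build()
    return HttpClient.newHttpClient()
        .send(request, HttpResponse.BodyHandlers.ofString())
        .body()
}
```

With the gateway from this walkthrough running, invokeFunction("http://127.0.0.1:31112", "hello-readers") should return the message configured in Handler.kt.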

Why would I use Kotlin to develop Serverless?

In the previous section, we saw that we could choose from a wide variety of programming languages to write Serverless functions for OpenFaaS. What language works best for you and makes the most sense depends on the use case and what you’re trying to achieve. Common Serverless languages are, e.g., Node, Python, and Go, but you’ll also find C#, Java, and even more in use.

Having a limited set of programming languages in a company probably leads to better internal tooling and also mitigates the issue of not being able to switch teams easily due to language barriers. I’m not a big fan of having hundreds of services all written in different languages, which most likely would become problematic at some point. Most bigger tech companies limit their language portfolio and only add new ones if circumstances demand it.

I personally have been using Kotlin for almost four years now, and it was clear that I would also use it to explore the Serverless space. We at bryter started using Kotlin Serverless functions not too long ago, and it’s working out well so far. Most of our backend services run Kotlin, and it’s the language we’re most experienced with. Nevertheless, we know that it might become necessary to add more languages, e.g., for performance reasons. The JVM footprint cannot be ignored and might impact performance here and there, for instance, when it comes to cold starts. To tweak things a bit, GraalVM could be a good option.

In a previous post, I wrote about the Server as a Function toolkit http4k, which is a fantastic library to enable Serverless functions in Kotlin. Http4k backs the functions we use at bryter, and I also proposed a corresponding template for the OpenFaaS store.
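The “Server as a Function” idea can be sketched with plain Kotlin function types. The Request and Response classes below are local stand-ins for illustration, not http4k’s own classes, and the recording filter is a hypothetical example of how behavior can be composed around a handler.

```kotlin
// Local stand-in types for illustration; http4k ships its own Request and Response.
data class Request(val path: String, val body: String = "")
data class Response(val status: Int, val body: String = "")

// A server is a function from request to response.
typealias HttpHandler = (Request) -> Response
// A filter wraps a handler and returns a new handler.
typealias Filter = (HttpHandler) -> HttpHandler

val hello: HttpHandler = { request ->
    if (request.path == "/hello") Response(200, "Hello kotlinexpertise readers")
    else Response(404, "Not found")
}

// Hypothetical filter that records each call in a list, to show composition.
fun recording(log: MutableList<String>): Filter = { next ->
    { request ->
        val response = next(request)
        log += "${request.path} -> ${response.status}"
        response
    }
}

fun main() {
    val log = mutableListOf<String>()
    val app = recording(log)(hello)
    println(app(Request("/hello")).body)     // Hello kotlinexpertise readers
    println(app(Request("/missing")).status) // 404
    println(log)                             // [/hello -> 200, /missing -> 404]
}
```

Since the whole application is an ordinary function, it can be called directly in a test or handed to whatever runtime hosts the function.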

Serverless tooling for Kotlin

A fairly new framework named kotless (Kotlin Serverless) is currently being developed by JetBrains. Its goal is to make it easy to turn Kotlin applications into ready-to-deploy functions and even to handle the deployment, which currently only works with AWS Lambda. The framework has a few very interesting features, and I’m looking forward to seeing where kotless is heading.

Summary and Outlook

Serverless approaches and their architectural implications are something we should be aware of when we think about modern software architecture. Function as a service, as a Serverless-related topic, has been around for a while. The big cloud players Amazon, Google, and Microsoft provide the most prominent tools. Still, we can avoid vendor lock-in by choosing a more independent platform like the one I presented in this article: OpenFaaS. We saw that setting up OpenFaaS is not rocket science and that it can run on your local machine, which makes it easy to get started. You can choose from a large set of programming languages and only need to make sure that your code can be packaged as a Docker image to deploy it to OpenFaaS. Templates help with setting up functions quickly, and it’s not too hard to write your own function templates if necessary. I have shown an example of a Kotlin template and what the resulting HTTP handler (representing the function) looks like. Kotlin is a valid alternative among many others, and you should decide on a case-by-case basis which programming language best suits your function’s needs. As I mentioned, I’ve been using the presented technologies for a while and am currently working on a real-life use case that uses them to set up a dynamic, highly scalable, and extensible platform, which I want to talk more about in upcoming articles.

The post Serverless Kotlin with OpenFaaS appeared first on Kotlin Expertise Blog.

Serverless Kotlin with OpenFaaS

Serverless Kotlin on OpenFaaS

With this article, my goal is to demonstrate how Serverless Kotlin can look like by introducing you to one of the coolest Serverless platforms: OpenFaaS. OpenFaaS is an open-source, community-owned project that you may use to run your functions and microservices on any public or private cloud. You can run your Docker image on OpenFaaS, which runs and scales it for you. As a result, you are free to choose any programming language as long as it can be packaged into a Docker image. Throughout this post, we want to learn about the concepts behind Serverless and Function as a Service (FaaS), and how we can deploy Serverless Kotlin functions to OpenFaaS.

Serverless and Function as a Service

Serverless Computing

With Serverless Computing, we describe a cloud model in which server management and infrastructure decisions don’t have to be tackled by the developers, but are taken care of by the cloud providers themselves. The term “Serverless” describes the fact that we don’t have to care much about infrastructure setup, scaling, and maintenance and that we can focus on developing code which can easily be deployed into production. The Serverless opportunities seem endless, and so does the landscape map published by the Cloud Native Computing Foundation (CNCF), which you can find here.

Serverless architecture is said to be the next big thing and somewhat the advancement of microservices: Monolith -> Microservices -> Serverless architecture

Function as a Service

One of the most essential Serverless offerings are so-called compute runtimes, also known as function as a service (FaaS) platforms. Many vendors provide these platforms, with the most prominent ones being AWS Lambda, Google Cloud Functions, and Microsoft Azure Functions. There are also open-source alternatives, such as Apache OpenWhisk or Oracle’s Fn Project. Many more tools and platforms exist, which are also part of the landscape map shown above.

The general idea behind FaaS is to offer a platform that can be used to execute code triggered by some event. The code can be deployed without maintaining infrastructure and just by uploading functionality to the cloud, which takes care of executing and also scaling the function. “Functions” in the context of FaaS are rather small units that generally should be stateless and can, as a result, easily be scaled horizontally. A FaaS platform will not only scale out your code if it’s under heavy load but also take care of removing instances if the function has not been invoked much for a while. This technique, for starters, helps to optimize costs but also requires awareness of cold start situations. Serverless overall has relevant positive characteristics and should be part of our discussions around reliable architecture alternatives. If you want to learn a bit more about the concepts and what Serverless architecture entails, I recommend watching “Serverless: the Future of Software Architecture” by Peter Sbarski. To be clear, I personally don’t believe that you should go Serverless no matter what, but rather see it as a valid concept that might solve parts of your problems.

OpenFaaS – Containers as Functions

OpenFaaS, as explained on their web site, “makes it simple to turn anything into a Serverless function that runs on Linux or Windows through Docker Swarm or Kubernetes”. They promise that it lets us run any code anywhere at any scale. You could describe OpenFaaS as a “containers as a function” platform since its form of abstracting functions is a Docker image. That characteristic is an excellent trait as it allows us to package any code into a Docker image, and OpenFaaS runs it, scales it, and also provides metrics for us. To be honest, it’s worth mentioning that a specific tool needs to be added to your containers, which is called Function Watchdog, a tiny Golang HTTP server which connects your function with the outside world. OpenFaaS itself runs on, e.g., Kubernetes or Docker Swarm is open source under MIT license, and is written in Go. You can find the GitHub project here.

No vendor lock-in

OpenFaaS relies on Docker images used to package our code, and the tool itself runs on platforms such as Kubernetes. The community has adopted all of these technologies and knows how to use them. As a result, you may move your OpenFaaS instance around to and from any public or private cloud without issue. OpenFaaS does not make you dependent on a particular vendor, which is contrary to what you get when relying on technologies like AWS Lambda. The fact of being dependent on a particular vendor is known as a vendor lock-in.

Other similar projects

The idea of deploying independent containers to a compute engine can be discovered in a few more projects. You can find a comparison of multiple similar tools here.

Serverless Kotlin deployed on OpenFaaS

Now that we’ve learned about the general ideas behind Serverless, FaaS and what OpenFaaS does, we want to get our hands a bit dirty by setting up an OpenFaaS Kubernetes cluster and learning how we can deploy functions to it.

OpenFaaS Deployment using Kubernetes

The following is based on the official guide. Read it to get more information and learn about alternative approaches.

As a first step, you need to make sure to have a Kubernetes cluster set up. If you want to run Kubernetes on a local machine, various tools can help you set up the cluster (e.g. k3s or minikube). On Mac and Windows, you can also use Docker’s desktop edition to run a Kubernetes cluster locally.

After having set up Kubernetes, we can start deploying OpenFaaS to the cluster. It’s incredibly easy using k3sup

# Install k3sup

curl -sLS https://get.k3sup.dev | sh

sudo install k3sup /usr/local/bin/ # <- this step might not be necessary

chmod +x k3sup

# applies openfaas namespaces, create user, applies helm chart

k3sup app install openfaas

After that, inspecting your cluster, you will find several running OpenFaaS pods.

You should be able to view the gateway UI via http://127.0.0.1:31112/ui/ where you log in using the credentials generated by k3sup. You can get the password via kubectl:

PASSWORD=$(kubectl get secret -n openfaas basic-auth -o jsonpath="{.data.basic-auth-password}" | base64 --decode; echo)

To allow faas-cli (needs to be installed) to access your newly deployed services, make sure to

1) set OPENFAAS_URL via export OPENFAAS_URL=http://127.0.0.1:31112

2) log in via faas-cli login --password {YOUR_PASSWORD_HERE}

The following script logs you in automatically:

PASSWORD=$(kubectl get secret -n openfaas basic-auth -o jsonpath="{.data.basic-auth-password}" | base64 --decode; echo)

echo -n $PASSWORD | faas-cli login --username admin --password-stdin

That’s it – We’re all set and can start deploying Serverless functions. 🎉

Creating and Deploying an OpenFaaS function

OpenFaaS offers different means and interfaces for deploying functions to the platform. We may use the CLI, the gateway UI, or the provided REST API, which is documented via this Swagger yaml.

Templates

The most straightforward way for getting started is via OpenFaaS’ template engine. With the faas-cli, we can create new functions based on available templates existing for various programming languages and tools. To get a list of all existing templates, you should run faas-cli template pull first. Running faas-cli new --list now, you should see a list of templates similar to the following:

csharp csharp-armhf dockerfile dockerfile-armhf go go-armhf java12 java8 node node-arm64 node-armhf node12 php7 python python-armhf python3 python3-armhf ruby

More templates are available via the OpenFaaS Store, which you can examine by running faas-cli template store list. Neither of both sources currently contains a Kotlin template, which I would love to change. Therefore, a change request is awaiting feedback and will hopefully add some official Kotlin to OpenFaaS.

On the bright side, it is quite easy to add my Kotlin templates to the cli by executing faas-cli template pull https://github.com/s1monw1/openfaas-kotlin. You should find two additional Kotlin variants now via faas-cli new --list. Let’s create a classic Kotlin function using the kotlin template:

faas-cli new hello-readers --lang=kotlin

# function can be found in ./hello-readers

By convention, OpenFaaS only exposes a particular part of each template to the user. If you want to look into that further, check out the Kotlin template and see how it is structured. The function is the one we have to deal with after running the faas-cli new command mentioned above.

Modify the template

In the newly generated function hello-readers, we can find a source file Handler.kt, which is the spot where we can implement the HTTP handling for our function. It’s a simple request-response mapper, and to demo it, we simply change the default body to "Hello kotlinexpertise readers".

class Handler : IHandler {

override fun handle(request: IRequest): IResponse {

return Response(body = "Hello kotlinexpertise readers")

}

}

Build and Deploy

Build the function

Next to the hello-readers folder, OpenFaaS should have generated a YAML file called hello-readers.yml. It contains the information the cli needs to build and deploy our function. You can learn more about the relevant YAML structure and how you may want to modify it here. Let’s build it.

# by default, OpenFaaS looks for a stack.yml file which can be adjusted using the -f flag

faas-cli build -f hello-readers.yml

OpenFaaS now runs the Dockerfile contained in the template (not visible to the user) to build the image which we can then deploy. There’s also a faas-cli up command available that wraps the build, push and deploy commands for our convenience. Either way, whether you use faas-cli up or faas-cli deploy, the result looks similar to the following output.

A successful deployment

> faas-cli deploy -f hello-readers.yml

Deploying: hello-readers.

WARNING! Communication is not secure, please consider using HTTPS. Letsencrypt.org offers free SSL/TLS certificates.

Deployed. 202 Accepted.

URL: http://127.0.0.1:31112/function/hello-readers

Clicking that URL reveals the message we previously configured: “Hello kotlinexpertise readers”. You can also examine the deployed function via the gateway UI.

Why would I use Kotlin to develop Serverless?

In the previous section, we saw that we could choose from a wide variety of programming languages to write Serverless functions for OpenFaaS. What language works best for you and makes the most sense depends on the use case and what you’re trying to achieve. Common Serverless languages are, e.g., Node, Python, and Go, but you’ll also find C#, Java, and even more in use.

Having a limited set of programming languages in a company probably leads to better internal tooling and also mitigates the issue of not being able to switch teams easily due to language barriers. I’m not a big fan of having hundreds of services all written in different languages, which most like would become problematic at some point. Most bigger tech companies limit their language portfolio and only add new ones if circumstances demand it.

I personally have been using Kotlin for almost four years now, and it was clear that I would also use it to explore the Serverless space. We at bryter have started using Kotlin Serverless functions not too long ago, and it’s working out well so far. Most of our backend services run Kotlin, and it’s the language we’re most experienced with. Nevertheless, we know that it might become necessary to add more languages, e.g., for performance reasons. The JVM footprint cannot be ignored and might impact performance here and there, for instance, when it comes to cold starts. To tweak things a bit, Graal VM could be a good option.

In a previous post, I wrote about the Server as a Function toolkit http4k, which is a fantastic library to enable Serverless functions in Kotlin. Http4k backs the functions we use at bryter, and I also proposed a corresponding template for the OpenFaaS store.

Serverless tooling for Kotlin

A pretty new framework named kotless (Kotlin Serverless) is currently being developed by Jetbrains with the goal of making it easy to turn Kotlin applications into ready-to-deploy functions and even handle the deployment which currently only works with AWS Lambda. The framework has a few very interesting features and I’m looking forward to see where kotless is heading.

Summary and Lookout

Serverless approaches and related architectural implications are relevant and something we should be aware of when considering approaches to modern software architecture. Functions as a service, as a Serverless related topic, has been around for a while. The big cloud players Amazon, Google, and Microsoft, provide the most prominent tools. Still, we can also get around vendor lock-in by opting to choose more independent platforms like the one I presented to you in this article: OpenFaaS. We saw that setting up OpenFaaS is no rocket science, and it can run on your local machine, which enables an excellent way to get started. You can choose from a big set of programming languages and just need to make sure that your code can be packaged as a Docker image to be able to deploy it to OpenFaaS. Templates can help with setting up functions quickly, and it’s not too hard to write your own function templates if necessary. I have shown an example of a Kotlin template and how the resulting HTTP handler (representing the function) looks like. Kotlin is a valid alternative amongst many others, and you should decide on a case-to-case basis, which programming language best suits your function’s needs. As I mentioned, I’ve been using the presented technologies for a while and currently work on a real-life use case to utilize that stuff to set up a dynamic, highly scalable and expandable platform, which I want to talk more about in upcoming articles.

The post Serverless Kotlin with OpenFaaS appeared first on Kotlin Expertise Blog.

Serverless Kotlin with OpenFaaS

Serverless Kotlin on OpenFaaS

With this article, my goal is to demonstrate what Serverless Kotlin can look like by introducing you to one of the coolest Serverless platforms: OpenFaaS. OpenFaaS is an open-source, community-owned project that you can use to run your functions and microservices on any public or private cloud. You hand OpenFaaS a Docker image, and it runs and scales it for you. As a result, you are free to choose any programming language as long as your code can be packaged into a Docker image. Throughout this post, we will learn about the concepts behind Serverless and Function as a Service (FaaS), and how we can deploy Serverless Kotlin functions to OpenFaaS.

Serverless and Function as a Service

Serverless Computing

With Serverless Computing, we describe a cloud model in which server management and infrastructure decisions don’t have to be tackled by the developers, but are taken care of by the cloud providers themselves. The term “Serverless” describes the fact that we don’t have to care much about infrastructure setup, scaling, and maintenance and that we can focus on developing code which can easily be deployed into production. The Serverless opportunities seem endless, and so does the landscape map published by the Cloud Native Computing Foundation (CNCF), which you can find here.

Serverless architecture is said to be the next big thing and somewhat the advancement of microservices: Monolith -> Microservices -> Serverless architecture

Function as a Service

One of the most essential Serverless offerings is the so-called compute runtime, also known as a function as a service (FaaS) platform. Many vendors provide these platforms, with the most prominent ones being AWS Lambda, Google Cloud Functions, and Microsoft Azure Functions. There are also open-source alternatives, such as Apache OpenWhisk or Oracle’s Fn Project. Many more tools and platforms exist, which are also part of the landscape map shown above.

The general idea behind FaaS is to offer a platform that can be used to execute code triggered by some event. The code can be deployed without maintaining infrastructure and just by uploading functionality to the cloud, which takes care of executing and also scaling the function. “Functions” in the context of FaaS are rather small units that generally should be stateless and can, as a result, easily be scaled horizontally. A FaaS platform will not only scale out your code if it’s under heavy load but also take care of removing instances if the function has not been invoked much for a while. This technique, for starters, helps to optimize costs but also requires awareness of cold start situations. Serverless overall has relevant positive characteristics and should be part of our discussions around reliable architecture alternatives. If you want to learn a bit more about the concepts and what Serverless architecture entails, I recommend watching “Serverless: the Future of Software Architecture” by Peter Sbarski. To be clear, I personally don’t believe that you should go Serverless no matter what, but rather see it as a valid concept that might solve parts of your problems.
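To make the idea of a small, stateless function more concrete, here is a minimal Kotlin sketch. The Event and Result types and the handle function are hypothetical names chosen for illustration; they are not part of any FaaS platform’s API. Because the function depends only on its input, any number of instances can process events side by side and return the same results, which is what makes horizontal scaling straightforward.

```kotlin
// Hypothetical event and result types, for illustration only.
data class Event(val name: String, val payload: String)
data class Result(val status: Int, val body: String)

// A "function" in the FaaS sense: no shared mutable state,
// the output depends only on the incoming event.
fun handle(event: Event): Result =
    if (event.payload.isBlank()) Result(400, "empty payload")
    else Result(200, "${event.name}: ${event.payload.uppercase()}")

fun main() {
    val events = listOf(Event("greet", "hello"), Event("greet", ""))
    // Simulate two independent instances handling the same events.
    val instanceA = events.map(::handle)
    val instanceB = events.map(::handle)
    println(instanceA)
    // A stateless function gives identical results on every instance.
    println(instanceA == instanceB)
}
```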

OpenFaaS – Containers as Functions

OpenFaaS, as explained on their web site, “makes it simple to turn anything into a Serverless function that runs on Linux or Windows through Docker Swarm or Kubernetes”. They promise that it lets us run any code anywhere at any scale. You could describe OpenFaaS as a “containers as a function” platform since its unit of abstraction for a function is a Docker image. That is an excellent trait, as it allows us to package any code into a Docker image, and OpenFaaS runs it, scales it, and also provides metrics for us. To be fair, it’s worth mentioning that a specific tool needs to be added to your containers: the Function Watchdog, a tiny Golang HTTP server that connects your function with the outside world. OpenFaaS itself runs on, e.g., Kubernetes or Docker Swarm, is open source under the MIT license, and is written in Go. You can find the GitHub project here.
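The role of the watchdog can be illustrated with a few lines of Kotlin. The following is only a rough sketch of the concept, not the actual OpenFaaS watchdog (which is written in Go and does considerably more). It uses the HTTP server that ships with the JDK, and the names startWatchdog and myFunction are made up for this example.

```kotlin
import com.sun.net.httpserver.HttpServer
import java.net.InetSocketAddress

// Stand-in for user code: takes the request body, returns the response body.
fun myFunction(input: String): String = "Hello, $input"

// Starts a tiny HTTP server that forwards every request body to the given function.
// This only illustrates the watchdog's role; it is not the real OpenFaaS watchdog.
fun startWatchdog(port: Int, function: (String) -> String): HttpServer {
    val server = HttpServer.create(InetSocketAddress(port), 0)
    server.createContext("/") { exchange ->
        val input = exchange.requestBody.readBytes().toString(Charsets.UTF_8)
        val output = function(input).toByteArray(Charsets.UTF_8)
        exchange.sendResponseHeaders(200, output.size.toLong())
        exchange.responseBody.use { it.write(output) }
    }
    server.start()
    return server
}

fun main() {
    val server = startWatchdog(8080, ::myFunction)
    println("Listening on port ${server.address.port}")
}
```

The function itself knows nothing about HTTP; the small server in front of it is what makes it reachable from the outside world.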

No vendor lock-in

OpenFaaS relies on Docker images to package our code, and the tool itself runs on platforms such as Kubernetes. These technologies are widely adopted, and the community knows how to use them. As a result, you can move your OpenFaaS instance to and from any public or private cloud without issue. OpenFaaS does not make you dependent on a particular vendor, which is contrary to what you get when relying on technologies like AWS Lambda. Being dependent on a particular vendor is known as vendor lock-in.

Other similar projects

The idea of deploying independent containers to a compute engine can be discovered in a few more projects. You can find a comparison of multiple similar tools here.

Serverless Kotlin deployed on OpenFaaS

Now that we’ve learned about the general ideas behind Serverless, FaaS and what OpenFaaS does, we want to get our hands a bit dirty by setting up an OpenFaaS Kubernetes cluster and learning how we can deploy functions to it.

OpenFaaS Deployment using Kubernetes

The following is based on the official guide. Read it to get more information and learn about alternative approaches.

As a first step, you need to make sure to have a Kubernetes cluster set up. If you want to run Kubernetes on a local machine, various tools can help you set up the cluster (e.g. k3s or minikube). On Mac and Windows, you can also use Docker’s desktop edition to run a Kubernetes cluster locally.

After having set up Kubernetes, we can start deploying OpenFaaS to the cluster. It’s incredibly easy using k3sup:

# Install k3sup

curl -sLS https://get.k3sup.dev | sh

chmod +x k3sup

sudo install k3sup /usr/local/bin/ # <- this step might not be necessary

# applies openfaas namespaces, creates user, applies helm chart

k3sup app install openfaas

After that, inspecting your cluster, you will find several running OpenFaaS pods.

You should be able to view the gateway UI via http://127.0.0.1:31112/ui/ where you log in using the credentials generated by k3sup. You can get the password via kubectl:

PASSWORD=$(kubectl get secret -n openfaas basic-auth -o jsonpath="{.data.basic-auth-password}" | base64 --decode; echo)

To allow faas-cli (needs to be installed) to access your newly deployed services, make sure to

1) set OPENFAAS_URL via export OPENFAAS_URL=http://127.0.0.1:31112

2) log in via faas-cli login --password {YOUR_PASSWORD_HERE}

The following script logs you in automatically:

PASSWORD=$(kubectl get secret -n openfaas basic-auth -o jsonpath="{.data.basic-auth-password}" | base64 --decode; echo)

echo -n "$PASSWORD" | faas-cli login --username admin --password-stdin
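Kubernetes stores secret values base64-encoded, which is why the commands above pipe the kubectl output through base64 --decode. If you ever need the same step in Kotlin, it could look roughly like this. The decodeSecret helper and the sample value are made up for illustration.

```kotlin
import java.util.Base64

// Decodes a base64-encoded secret value, like `base64 --decode` in the shell commands above.
fun decodeSecret(encoded: String): String =
    String(Base64.getDecoder().decode(encoded.trim()), Charsets.UTF_8)

fun main() {
    // Made-up value standing in for the output of `kubectl get secret ... -o jsonpath=...`.
    val fromKubectl = Base64.getEncoder().encodeToString("example-password".toByteArray())
    println(decodeSecret(fromKubectl))
}
```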

That’s it – We’re all set and can start deploying Serverless functions. 🎉

Creating and Deploying an OpenFaaS function

OpenFaaS offers different means and interfaces for deploying functions to the platform. We may use the CLI, the gateway UI, or the provided REST API, which is documented via this Swagger yaml.

Templates

The most straightforward way for getting started is via OpenFaaS’ template engine. With the faas-cli, we can create new functions based on available templates existing for various programming languages and tools. To get a list of all existing templates, you should run faas-cli template pull first. Running faas-cli new --list now, you should see a list of templates similar to the following:

csharp csharp-armhf dockerfile dockerfile-armhf go go-armhf java12 java8 node node-arm64 node-armhf node12 php7 python python-armhf python3 python3-armhf ruby

More templates are available via the OpenFaaS Store, which you can examine by running faas-cli template store list. Neither source currently contains a Kotlin template, which I would love to change. Therefore, a change request is awaiting feedback and will hopefully add official Kotlin support to OpenFaaS.

On the bright side, it is quite easy to add my Kotlin templates to the cli by executing faas-cli template pull https://github.com/s1monw1/openfaas-kotlin. You should find two additional Kotlin variants now via faas-cli new --list. Let’s create a classic Kotlin function using the kotlin template:

faas-cli new hello-readers --lang=kotlin

# function can be found in ./hello-readers

By convention, OpenFaaS only exposes a particular part of each template to the user. If you want to look into that further, check out the Kotlin template and see how it is structured. The function part is the one we have to deal with after running the faas-cli new command mentioned above.

Modify the template

In the newly generated function hello-readers, we can find a source file Handler.kt, which is the spot where we can implement the HTTP handling for our function. It’s a simple request-response mapper, and to demo it, we simply change the default body to "Hello kotlinexpertise readers".

class Handler : IHandler {
    override fun handle(request: IRequest): IResponse {
        return Response(body = "Hello kotlinexpertise readers")
    }
}
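If you want to try the handler without building an image, a self-contained sketch like the following can help. Note that the IRequest, IResponse, IHandler, Request and Response definitions below are simplified stand-ins written for this example so that it compiles on its own; the real types come with the template and may look different.

```kotlin
// Simplified stand-ins for the template's types, so the sketch compiles on its own.
// The real definitions live in the template and may differ.
interface IRequest { val body: String }
interface IResponse { val body: String; val statusCode: Int }
interface IHandler { fun handle(request: IRequest): IResponse }

data class Request(override val body: String) : IRequest
data class Response(override val body: String, override val statusCode: Int = 200) : IResponse

// The handler from above, unchanged.
class Handler : IHandler {
    override fun handle(request: IRequest): IResponse {
        return Response(body = "Hello kotlinexpertise readers")
    }
}

fun main() {
    // Call the handler directly, without any HTTP server in between.
    val response = Handler().handle(Request(body = "ignored"))
    println("${response.statusCode}: ${response.body}")
}
```

Since the handler is a plain class with a single method, it can be exercised in a unit test the same way.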

Build and Deploy

Build the function

Next to the hello-readers folder, OpenFaaS should have generated a YAML file called hello-readers.yml. It contains the information the cli needs to build and deploy our function. You can learn more about the relevant YAML structure and how you may want to modify it here. Let’s build it.

# by default, OpenFaaS looks for a stack.yml file which can be adjusted using the -f flag

faas-cli build -f hello-readers.yml

OpenFaaS now runs the Dockerfile contained in the template (not visible to the user) to build the image which we can then deploy. There’s also a faas-cli up command available that wraps the build, push and deploy commands for our convenience. Either way, whether you use faas-cli up or faas-cli deploy, the result looks similar to the following output.

A successful deployment

> faas-cli deploy -f hello-readers.yml

Deploying: hello-readers.

WARNING! Communication is not secure, please consider using HTTPS. Letsencrypt.org offers free SSL/TLS certificates.

Deployed. 202 Accepted.

URL: http://127.0.0.1:31112/function/hello-readers

Clicking that URL reveals the message we previously configured: “Hello kotlinexpertise readers”. You can also examine the deployed function via the gateway UI.
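The function can also be called from code. Here is a minimal sketch using the HTTP client that ships with Java 11 and later. It assumes the gateway address and function name from the deployment output above; the invokeRequest helper is a made-up name, and sending a POST is a choice made for this example.

```kotlin
import java.net.URI
import java.net.http.HttpClient
import java.net.http.HttpRequest
import java.net.http.HttpResponse

// Builds a POST request against the gateway's /function/<name> route shown in the deploy output.
fun invokeRequest(gateway: String, functionName: String, body: String = ""): HttpRequest =
    HttpRequest.newBuilder(URI.create("${gateway.trimEnd('/')}/function/$functionName"))
        .POST(HttpRequest.BodyPublishers.ofString(body))
        .build()

fun main() {
    // Assumes the gateway from this article is reachable on the local machine.
    val request = invokeRequest("http://127.0.0.1:31112", "hello-readers")
    val response = HttpClient.newHttpClient().send(request, HttpResponse.BodyHandlers.ofString())
    println("${response.statusCode()}: ${response.body()}")
}
```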

Why would I use Kotlin to develop Serverless?

In the previous section, we saw that we could choose from a wide variety of programming languages to write Serverless functions for OpenFaaS. What language works best for you and makes the most sense depends on the use case and what you’re trying to achieve. Common Serverless languages are, e.g., Node, Python, and Go, but you’ll also find C#, Java, and even more in use.

Having a limited set of programming languages in a company probably leads to better internal tooling and also mitigates the issue of not being able to switch teams easily due to language barriers. I’m not a big fan of having hundreds of services all written in different languages, which most likely would become problematic at some point. Most bigger tech companies limit their language portfolio and only add new ones if circumstances demand it.

I personally have been using Kotlin for almost four years now, and it was clear that I would also use it to explore the Serverless space. We at bryter started using Kotlin Serverless functions not too long ago, and it’s working out well so far. Most of our backend services run Kotlin, and it’s the language we’re most experienced with. Nevertheless, we know that it might become necessary to add more languages, e.g., for performance reasons. The JVM footprint cannot be ignored and might impact performance here and there, for instance, when it comes to cold starts. To tweak things a bit, GraalVM could be a good option.

In a previous post, I wrote about the Server as a Function toolkit http4k, which is a fantastic library to enable Serverless functions in Kotlin. Http4k backs the functions we use at bryter, and I also proposed a corresponding template for the OpenFaaS store.

Serverless tooling for Kotlin

A fairly new framework named kotless (Kotlin Serverless) is currently being developed by JetBrains with the goal of making it easy to turn Kotlin applications into ready-to-deploy functions. It can even handle the deployment, which currently only works with AWS Lambda. The framework has a few very interesting features, and I’m looking forward to seeing where kotless is heading.

Summary and Outlook