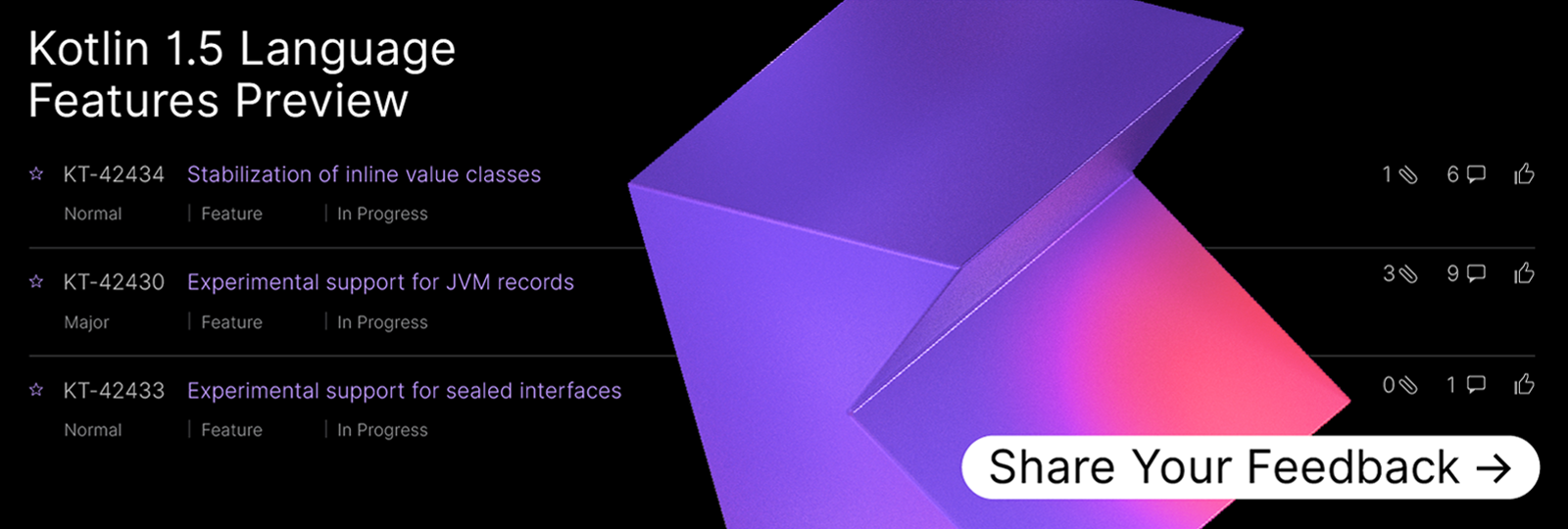

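We’re planning to add new language features in Kotlin 1.5, and you can already try them out in Kotlin 1.4.30: inline value classes, support for JVM records, and sealed interfaces along with loosened restrictions on sealed classes.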

To try these new features, you need to specify the 1.5 language version.

The new release cadence means that Kotlin 1.5 is going to be released in a few months, but new features are already available for preview in 1.4.30. Your early feedback is crucial to us, so please give these new features a try now!

Stabilization of inline value classes

Inline classes have been available in Alpha since Kotlin 1.3, and in 1.4.30 they are promoted to Beta.

Kotlin 1.5 stabilizes the concept of inline classes but makes it a part of a more general feature, value classes, which we will describe later in this post.

We’ll begin with a refresher on how inline classes work. If you are already familiar with inline classes, you can skip this section and go directly to the new changes.

As a quick reminder, an inline class eliminates a wrapper around a value:

inline class Color(val rgb: Int)

An inline class can be a wrapper both for a primitive type and for any reference type, like String.

The compiler replaces inline class instances (in our example, the Color instance) with the underlying type (Int) in the bytecode, when possible:

fun changeBackground(color: Color)

val blue = Color(255)

changeBackground(blue)

Under the hood, the compiler generates the changeBackground function with a mangled name taking Int as a parameter, and it passes the 255 constant directly without creating a wrapper at the call site:

fun changeBackground-euwHqFQ(color: Int)

changeBackground-euwHqFQ(255) // no extra object is allocated!

The name is mangled to allow the seamless overload of functions taking instances of different inline classes and to prevent accidental invocations from the Java code that could violate the internal constraints of an inline class. Read below to find out how to make it usable from Java.

The wrapper is not always eliminated in the bytecode. This happens only when possible, and it works very similarly to built-in primitive types. When you define a variable of the Color type or pass it directly into a function, it gets replaced with the underlying value:

val color = Color(0) // primitive

changeBackground(color) // primitive

In this example, the color variable has the type Color during compilation, but it’s replaced with Int in the bytecode.

If you store it in a collection or pass it to a generic function, however, it gets boxed into a regular object of the Color type:

genericFunc(color) // boxed

val list = listOf(color) // boxed

val first = list.first() // unboxed back to primitive

Boxing and unboxing are done automatically by the compiler. You don’t need to do anything about it, but it’s useful to understand the internals.
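As a rough illustration of the rules above, the sketch below runs the same calls end to end. It uses the `@JvmInline value class` syntax described later in this post so that it compiles on current compilers. The bodies of `changeBackground` and `genericFunc` are placeholders invented for this example.

```kotlin
// Sketch only: the function bodies are placeholders, not part of any real API.
@JvmInline
value class Color(val rgb: Int)

// Takes the inline class directly, so the compiler can pass a plain Int in the bytecode.
fun changeBackground(color: Color): String = "background set to ${color.rgb}"

// Generic parameter: a Color argument has to be boxed when passed here.
fun <T> genericFunc(value: T): T = value

fun main() {
    val color = Color(255)              // represented as an Int
    println(changeBackground(color))    // background set to 255

    val list = listOf(color)            // boxed inside the collection
    val first = list.first()            // unboxed again
    println(first == color)             // true
    println(genericFunc(color).rgb)     // 255
}
```

Whether a value is boxed never changes the observable behavior here. The only difference is the allocation cost.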

Changing JVM name for Java calls

Starting from 1.4.30, you can change the JVM name of a function taking an inline class as a parameter to make it usable from Java. By default, such names are mangled to prevent accidental usages from Java or conflicting overloads (like changeBackground-euwHqFQ in the example above).

If you annotate a function with @JvmName, it changes the name of this function in the bytecode and makes it possible to call it from Java and pass a value directly:

// Kotlin declarations

inline class Timeout(val millis: Long)

val Int.millis get() = Timeout(this.toLong())

val Int.seconds get() = Timeout(this * 1000L)

@JvmName("greetAfterTimeoutMillis")

fun greetAfterTimeout(timeout: Timeout)

// Kotlin usage

greetAfterTimeout(2.seconds)

// Java usage

greetAfterTimeoutMillis(2000);

As always with a function annotated with @JvmName, from Kotlin you call it by its Kotlin name. Kotlin usage is type-safe, since you can only pass a value of the Timeout type as an argument, and the units are obvious from the usage.

From Java you can pass a long value directly. It’s no longer type-safe, and that’s why it doesn’t work by default. If you see greetAfterTimeout(2) in the code, it’s not immediately obvious whether it’s 2 seconds, 2 milliseconds, or 2 years.

By providing the annotation you explicitly emphasize that you intend this function to be called from Java. A descriptive name helps avoid confusion: adding the “Millis” suffix to the JVM name makes the units clear for Java users.
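To make the Kotlin side of this concrete, here is a minimal runnable sketch of the declarations above. It is written with the `@JvmInline value class` syntax described later in this post, and the body of `greetAfterTimeout` is a placeholder invented for illustration.

```kotlin
@JvmInline
value class Timeout(val millis: Long)

val Int.millis: Timeout get() = Timeout(this.toLong())
val Int.seconds: Timeout get() = Timeout(this * 1000L)

// Without @JvmName, the JVM name of this function would be mangled.
@JvmName("greetAfterTimeoutMillis")
fun greetAfterTimeout(timeout: Timeout): String =
    "Hello after ${timeout.millis} ms" // placeholder body for this sketch

fun main() {
    // Kotlin callers still use the Kotlin name and must pass a Timeout.
    println(greetAfterTimeout(2.seconds))  // Hello after 2000 ms
    println(greetAfterTimeout(500.millis)) // Hello after 500 ms
    // greetAfterTimeout(2000L) would not compile: a Long is not a Timeout.
}
```

From Java, the same function would be reachable under the name greetAfterTimeoutMillis and would accept a plain long.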

Init blocks

Another improvement for inline classes in 1.4.30 is that you can now define initialization logic in the init block:

inline class Name(val s: String) {

init {

require(s.isNotEmpty())

}

}

This was previously forbidden.
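The following sketch shows what the init block buys you in practice: an invalid value is rejected at construction time. It uses the `@JvmInline value class` syntax described later in this post, and the error message is an addition made for this example.

```kotlin
@JvmInline
value class Name(val s: String) {
    init {
        // require throws IllegalArgumentException when the condition is false.
        require(s.isNotEmpty()) { "Name must not be empty" }
    }
}

fun main() {
    println(Name("Kotlin").s) // Kotlin

    // Constructing an invalid Name fails before the value can be used anywhere.
    val failure = runCatching { Name("") }.exceptionOrNull()
    println(failure is IllegalArgumentException) // true
}
```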

You can read more details about inline classes in the corresponding KEEP, in the documentation, and in the discussion under this issue.

Inline value classes

Kotlin 1.5 stabilizes the concept of inline classes and makes it a part of a more general feature: value classes.

Until now, “inline” classes constituted a separate language feature, but they are now becoming a specific JVM optimization for a value class with one parameter. Value classes represent a more general concept and will support different optimizations: inline classes now, and Valhalla primitive classes in the future when project Valhalla becomes available (more about this below).

The only thing that changes for you at the moment is syntax. Since an inline class is an optimized value class, you have to declare it differently than you used to:

@JvmInline

value class Color(val rgb: Int)

You define a value class with one constructor parameter and annotate it with @JvmInline. We expect everyone to use this new syntax starting from Kotlin 1.5. The old syntax inline class will continue to work for some time. It will be deprecated with a warning in 1.5 that will include an option to migrate all your declarations automatically. It will later be deprecated with an error.

Value classes

A value class represents an immutable entity with data. At the moment, a value class can contain only one property to support the use-case of “old” inline classes.

In future Kotlin versions with full support for this feature, it will be possible to define value classes with many properties. All the values should be read-only vals:

value class Point(val x: Int, val y: Int)

Value classes have no identity: they are completely defined by the data stored and === identity checks aren’t allowed for them. The == equality check automatically compares the underlying data.
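A small sketch of these equality rules, using the single-property `Color` class that is supported today:

```kotlin
@JvmInline
value class Color(val rgb: Int)

fun main() {
    val a = Color(255)
    val b = Color(255)

    println(a == b)        // true: the wrapped Int values are compared
    println(a == Color(0)) // false

    // println(a === b)    // rejected by the compiler: identity checks are not allowed
}
```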

This “identityless” quality of value classes allows significant future optimizations: project Valhalla’s arrival to the JVM will allow value classes to be implemented as JVM primitive classes under the hood.

The immutability constraint, and therefore the possibility of Valhalla optimizations, makes value classes different from data classes.

Future Valhalla optimization

Project Valhalla introduces a new concept to Java and the JVM: primitive classes.

The main goal of primitive classes is to combine performant primitives with the object-oriented benefits of regular JVM classes. Primitive classes are data holders whose instances can be stored in variables, on the computation stack, and operated on directly, without headers and pointers. In this regard, they are similar to primitive values like int, long, etc. (in Kotlin, you don’t work with primitive types directly but the compiler generates them under the hood).

An important advantage of primitive classes is that they allow the flat and dense layout of objects in memory. Currently, Array<Point> is an array of references. With Valhalla support, when defining Point as a primitive class (in Java terminology) or as a value class with the underlying optimization (in Kotlin terminology), the JVM can optimize it and store an array of Points in a “flat” layout, as an array of many xs and ys directly, not as an array of references.

We’re really looking forward to the upcoming JVM changes and we want Kotlin to benefit from them. At the same time, we don’t want to force our community to depend on new JVM versions to use value classes, and so we are going to support them for earlier JVM versions as well. When compiling the code to the JVM with Valhalla support, the latest JVM optimizations will work for value classes.

Mutating methods

There’s much more to say regarding the functionality of value classes. Since value classes represent “immutable” data, mutating methods, like those in Swift, are possible for them. A mutating method is a member function or property setter that returns a new instance rather than updating an existing one. The main benefit is that you can use such methods with a familiar syntax. This still needs to be prototyped in the language.
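Since the mutating-method syntax does not exist yet, there is nothing to show for it. The closest equivalent today is an ordinary member function that returns a new instance, which the caller then reassigns. The names `Counter` and `incremented` are made up for this sketch and do not reflect any planned syntax.

```kotlin
@JvmInline
value class Counter(val value: Int) {
    // Returns a new instance instead of changing this one.
    fun incremented(): Counter = Counter(value + 1)
}

fun main() {
    var counter = Counter(0)
    val original = counter

    // "Mutation" is expressed as reassignment of the variable.
    counter = counter.incremented()

    println(counter.value)  // 1
    println(original.value) // 0: the original value is untouched
}
```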

More details

The @JvmInline annotation is JVM-specific. On other backends, value classes can be implemented differently, for instance, as Swift structs in Kotlin/Native.

You can read the details about value classes in the Design Note for Kotlin value classes, or watch an extract from Roman Elizarov’s “A look into the future” talk.

Support for JVM records

Another upcoming improvement in the JVM ecosystem is Java records. They are analogous to Kotlin data classes and are mainly simple holders of data.

Java records don’t follow the JavaBeans convention, and they have ‘x()’ and ‘y()’ methods instead of the familiar ‘getX()’ and ‘getY()’.

Interoperability with Java has always been, and remains, a priority for Kotlin. Thus, Kotlin code “understands” new Java records and sees them as classes with Kotlin properties. This works like it does for regular Java classes following the JavaBeans convention:

// Java

record Point(int x, int y) { }

// Kotlin

fun foo(point: Point) {

point.x // seen as property

point.x() // also works

}

Mainly for interoperability reasons, you can annotate your data class with @JvmRecord to have new JVM record methods generated:

@JvmRecord

data class Point(val x: Int, val y: Int)

The @JvmRecord annotation makes the compiler generate x() and y() methods instead of the standard getX() and getY() methods. We assume that you only need to use this annotation to preserve the API of the class when converting it from Java to Kotlin. In all the other use cases, Kotlin’s familiar data classes can be used instead without issue.

This annotation is only available if you compile Kotlin code to JVM version 15 or later. You can read more about this feature in the corresponding KEEP or in the documentation, as well as in the discussion in this issue.

Sealed interfaces and sealed classes improvements

When you make a class sealed, it restricts the hierarchy to defined subclasses, which allows exhaustive checks in when branches. In Kotlin 1.4, the sealed class hierarchy comes with two constraints. First, the top class can’t be a sealed interface; it has to be a class. Second, all the subclasses should be located in the same file.

Kotlin 1.5 removes both constraints: you can now make an interface sealed. The subclasses (of both sealed classes and sealed interfaces) should be located in the same compilation unit and in the same package as the superclass, but they can now be located in different files.

sealed interface Expr

data class Const(val number: Double) : Expr

data class Sum(val e1: Expr, val e2: Expr) : Expr

object NotANumber : Expr

fun eval(expr: Expr): Double = when(expr) {

is Const -> expr.number

is Sum -> eval(expr.e1) + eval(expr.e2)

NotANumber -> Double.NaN

}

Sealed classes, and now interfaces, are useful for defining abstract data type (ADT) hierarchies.
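As a usage example, the hierarchy above can be evaluated as follows. The declarations are repeated so that the sketch is self-contained. The expressions built in `main` are made up for illustration.

```kotlin
sealed interface Expr
data class Const(val number: Double) : Expr
data class Sum(val e1: Expr, val e2: Expr) : Expr
object NotANumber : Expr

// No else branch is needed: the compiler knows every implementation of Expr.
fun eval(expr: Expr): Double = when (expr) {
    is Const -> expr.number
    is Sum -> eval(expr.e1) + eval(expr.e2)
    NotANumber -> Double.NaN
}

fun main() {
    println(eval(Sum(Const(1.0), Const(2.0)))) // 3.0
    println(eval(Sum(Const(1.0), NotANumber))) // NaN
}
```

If a new implementation of `Expr` is added later, the `when` expression stops compiling until a branch for it is provided.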

Another important use case that can now be nicely addressed with sealed interfaces is closing an interface for inheritance outside the library. Defining an interface as sealed restricts its implementation to the same compilation unit and the same package, which, in the case of a library, makes it impossible to implement outside of the library.

For example, the Job interface from the kotlinx.coroutines package is only intended to be implemented inside the kotlinx.coroutines library. Making it sealed makes this intention explicit:

package kotlinx.coroutines

sealed interface Job { ... }

As a user of the library, you are no longer allowed to define your own subclass of Job. This was always “implied”, but with sealed interfaces, the compiler can formally forbid that.
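The same pattern can be sketched for a library of your own. Everything below is hypothetical: `Handle`, `HandleImpl`, and `newHandle` are names invented for this example and have nothing to do with the actual kotlinx.coroutines sources.

```kotlin
// Public API: clients can use Handle but cannot implement it outside this module and package.
sealed interface Handle {
    val isActive: Boolean
    fun cancel()
}

// The only implementation, hidden from clients.
private class HandleImpl : Handle {
    override var isActive: Boolean = true
        private set

    override fun cancel() {
        isActive = false
    }
}

// Clients obtain instances through a factory function instead of subclassing.
fun newHandle(): Handle = HandleImpl()

fun main() {
    val handle = newHandle()
    println(handle.isActive) // true
    handle.cancel()
    println(handle.isActive) // false
}
```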

Using JVM support in the future

Preview support for sealed classes has been introduced in Java 15 and on the JVM. In the future, we’re going to use the natural JVM support for sealed classes if you compile Kotlin code to the latest JVM (most likely JVM 17 or later when this feature becomes stable).

In Java, you explicitly list all the subclasses of the given sealed class or interface:

// Java

public sealed interface Expression

permits Const, Sum, NotANumber { ... }

This information is stored in the class file using the new PermittedSubclasses attribute. The JVM recognizes sealed classes at runtime and prevents their extension by unauthorized subclasses.

In the future, when you compile Kotlin to the latest JVM, this new JVM support for sealed classes will be used. Under the hood, the compiler will generate a permitted subclasses list in the bytecode to ensure there is JVM support and additional runtime checks.

// for JVM 17 or later

Expr::class.java.permittedSubclasses // [Const, Sum, NotANumber]

In Kotlin, you don’t need to specify the subclasses list! The compiler will generate the list based on the declared subclasses in the same package.

The ability to explicitly specify the subclasses for a super class or interface might be added later as an optional specification. At the moment we suspect it won’t be necessary, but we’ll be happy to hear about your use cases, and whether you need this functionality!

Note that for older JVM versions it’s theoretically possible to define a Java subclass to the Kotlin sealed interface, but don’t do it! Since JVM support for permitted subclasses is not yet available, this constraint is enforced only by the Kotlin compiler. We’ll add IDE warnings to prevent doing this accidentally. In the future, the new mechanism will be used for the latest JVM versions to ensure there are no “unauthorized” subclasses from Java.

You can read more about sealed interfaces and the loosened sealed classes restrictions in the corresponding KEEP or in the documentation, and see discussion in this issue.

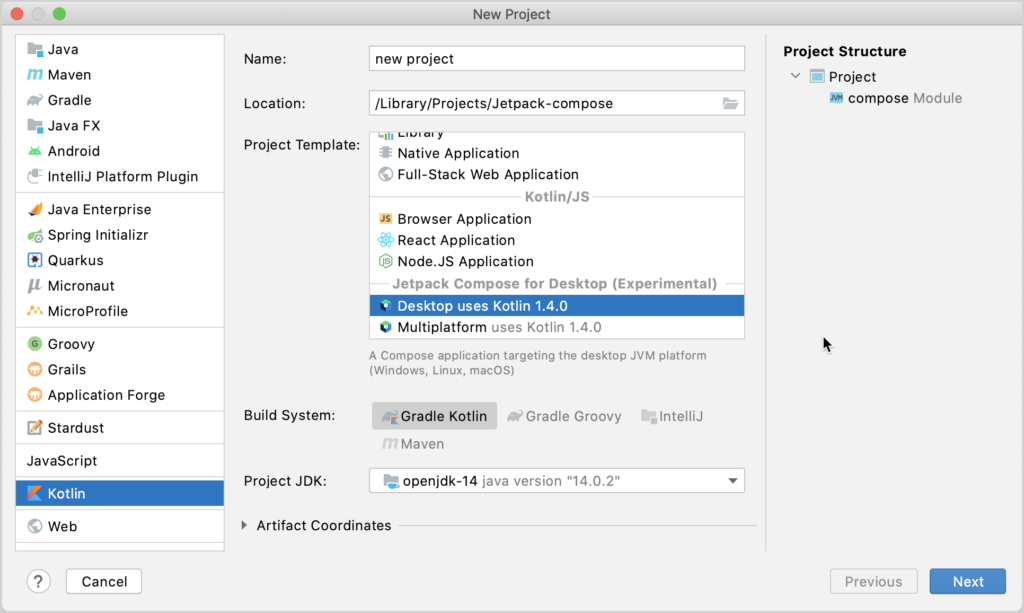

How to try the new features

You need to use Kotlin 1.4.30. Specify language version 1.5 to enable the new features:

compileKotlin {

kotlinOptions {

languageVersion = "1.5"

apiVersion = "1.5"

}

}

To try JVM records, you additionally need to use jvmTarget 15 and enable JVM preview features: add the compiler options -language-version 1.5 and -Xjvm-enable-preview.
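If your build uses the Gradle Kotlin DSL rather than the Groovy syntax shown above, the settings might look roughly like this. This is a configuration sketch that assumes a `build.gradle.kts` file with the Kotlin 1.4.30 Gradle plugin already applied. Adjust it to your own build.

```kotlin
// build.gradle.kts (sketch, assuming the Kotlin 1.4.30 Gradle plugin is applied)
tasks.withType<org.jetbrains.kotlin.gradle.tasks.KotlinCompile>().configureEach {
    kotlinOptions {
        // Enables the 1.5 preview language features.
        languageVersion = "1.5"
        apiVersion = "1.5"

        // Only needed for trying JVM records.
        jvmTarget = "15"
        freeCompilerArgs = freeCompilerArgs + "-Xjvm-enable-preview"
    }
}
```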

Pre-release notes

Note that support for the new features is experimental and the 1.5 language version support is in the pre-release status. Setting the language version to 1.5 in the Kotlin 1.4.30 compiler is equivalent to trying the 1.5-M0 preview. The backward compatibility guarantees do not cover pre-release versions. The features and the API may change in subsequent releases. When we reach a final Kotlin 1.5-RC, all binaries produced by pre-release versions will be outlawed by the compiler, and you will be required to recompile everything that was compiled by 1.5‑Mx.

Share your feedback

Please try the new features described in this post and share your feedback! You can find further details in KEEPs and take part in the discussions in YouTrack, and you can report new issues if something doesn’t work for you. Please share your findings regarding how well the new features address use cases in your projects!

Further reading and discussion: