Kotlin 1.5.0-RC is available with all the features planned for 1.5.0 – check out the entire scope of the upcoming release! New language features, stdlib updates, an improved testing library, and many more changes are receiving a final polish. The only additional changes before the release will be fixes.

Try the modern Kotlin APIs on your real-life projects with 1.5.0-RC and help us make the release version better! Report any issues you find to our issue tracker, YouTrack.

Install 1.5.0-RC

In this post, we’ll walk you through the changes to the Kotlin standard and test libraries in 1.5.0-RC. You can find all the details below!

Stable unsigned integer types

The standard library includes the unsigned integer API that comes in useful for dealing with non-negative integer operations. It includes:

- Unsigned number types: UInt, ULong, UByte, UShort, and related functions, such as conversions.

- Aggregate types: arrays, ranges, and progressions of unsigned integers: UIntArray, UIntRange, and similar containers for other types.

Unsigned integer types have been available in Beta since Kotlin 1.3. Now we are classifying the unsigned integer types and operations as stable, making them available without opt-in and safe to use in real-life projects.

Namely, the new stable APIs are:

- Unsigned integer types

- Ranges and progressions of unsigned integer types

- Functions that operate with unsigned integer types

fun main() {

//sampleStart

val zero = 0U // Define unsigned numbers with literal suffixes

val ten = 10.toUInt() // or by converting non-negative signed numbers

//val minusOne: UInt = -1U // Error: unary minus is not defined

val range: UIntRange = zero..ten // Separate types for ranges and progressions

for (i in range) print(i)

println()

println("UInt covers the range from ${UInt.MIN_VALUE} to ${UInt.MAX_VALUE}") // UInt covers the range from 0 to 4294967295

//sampleEnd

}

Arrays of unsigned integers remain in Beta. So do unsigned integer varargs that are backed by arrays. If you want to use them in your code, you can opt in with the @ExperimentalUnsignedTypes annotation.
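As a minimal sketch of that opt-in, the snippet below marks every function that touches UIntArray with @ExperimentalUnsignedTypes. The helper name total is hypothetical and exists only for illustration.

```kotlin
// Hypothetical helper: a vararg of UInt is backed by UIntArray, so it needs the opt-in too.
@ExperimentalUnsignedTypes
fun total(vararg values: UInt): UInt = values.sum()

@ExperimentalUnsignedTypes
fun main() {
    val numbers: UIntArray = uintArrayOf(1u, 2u, 3u)
    println(numbers.sum())   // 6
    println(total(4u, 5u))   // 9
}
```

Annotating a declaration this way propagates the opt-in requirement to its callers, which is why main() carries the annotation as well.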

Learn more about unsigned integers in Kotlin.

Extensions for java.nio.file.Path API

Kotlin now provides a way to use the modern non-blocking Java IO in a Kotlin-idiomatic style out of the box via the extension functions for java.nio.file.Path.

Here is a small example:

import kotlin.io.path.*

import java.nio.file.Path

fun main() {

// construct path with the div (/) operator

val baseDir = Path("/base")

val subDir = baseDir / "subdirectory"

// list files in a directory

val kotlinFiles = Path("/home/user").listDirectoryEntries("*.kt")

// count lines in all kotlin files

val totalLines = kotlinFiles.sumOf { file -> file.useLines { lines -> lines.count() } }

}

These extensions were introduced as an experimental feature in Kotlin 1.4.20, and are now available without an opt-in. Check out the kotlin.io.path package for the list of functions that you can use.

The existing extensions for File API remain available, so you are free to choose the API you like best.
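To give a feel for a few more functions from the kotlin.io.path package, here is a small sketch that writes a file into a temporary directory and reads it back. The helper name roundTrip, the directory prefix, and the file name are assumptions made for the example.

```kotlin
import kotlin.io.path.*

// Hypothetical helper: writes text to a file in a fresh temp directory and reads it back.
fun roundTrip(text: String): String {
    val dir = createTempDirectory("kotlin-path-demo")
    val file = dir / "note.txt"
    try {
        file.writeText(text)
        return file.readText()
    } finally {
        // Clean up the file first, then the (now empty) directory
        file.deleteIfExists()
        dir.deleteIfExists()
    }
}

fun main() {
    println(roundTrip("Hello from kotlin.io.path"))
}
```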

Locale-agnostic API for uppercase and lowercase

Many of you are familiar with the stdlib functions for changing the case of strings and characters: toUpperCase(), toLowerCase(), toTitleCase(). They generally work fine, but they can cause a headache when it comes to dealing with different platform locales – they are all locale-sensitive, which means their result can differ depending on the locale. For example, what does "Kotlin".toUpperCase() return? "Obviously KOTLIN", you would say. But in the Turkish locale, the capital i is İ, so the result is different: KOTLİN.

Now there is a new locale-agnostic API for changing the case of strings and characters: uppercase(), lowercase(), titlecase() extensions, and their *Char() counterparts. You may have already tried its preview in 1.4.30.

The new functions work the same way regardless of the platform locale settings. Just call these functions and leave the rest to the stdlib.

fun main() {

//sampleStart

// replace the old API

println("Kotlin".toUpperCase()) // KOTLIN or KOTLİN or?..

// with the new API

println("Kotlin".uppercase()) // Always KOTLIN

//sampleEnd

}

On the JVM, you can perform locale-sensitive case change by calling the new functions with the current locale as an argument:

"Kotlin".uppercase(Locale.getDefault()) // Locale-sensitive uppercasing

The new functions will completely replace the old ones, which we’re deprecating now.
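The difference is easiest to see when the locale is passed explicitly. The following JVM-only sketch compares the locale-agnostic call with a Turkish-locale call and also shows the Char counterparts. The choice of the "tr" language tag is just an example.

```kotlin
import java.util.Locale

fun main() {
    val turkish = Locale.forLanguageTag("tr")

    // Locale-agnostic: the same result on every machine
    println("Kotlin".uppercase())          // KOTLIN
    // Locale-sensitive: Turkish rules map i to the dotted capital İ
    println("Kotlin".uppercase(turkish))   // KOTLİN

    // Char counterparts return a Char
    println('k'.uppercaseChar())           // K
    println('K'.lowercaseChar())           // k
}
```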

Clear Char-to-code and Char-to-digit conversions

The operation for getting the UTF-16 code of a character, the toInt() function, was a common pitfall because it looks very similar to String.toInt() on one-digit strings, which produces the Int represented by that digit.

"4".toInt() // returns 4

'4'.toInt() // returns 52

Additionally, there was no common function that would return the numeric value 4 for Char '4'.

To solve these issues, there is now a set of new functions for conversion between characters and their integer codes and numeric values:

- Char(code) and Char.code convert between a char and its code.

- Char.digitToInt(radix: Int) and its *OrNull version create an integer from a digit in the specified radix.

- Int.digitToChar(radix: Int) creates a char from a digit that represents an integer in the specified radix.

These functions have clear names and make the code more readable:

fun main() {

//sampleStart

val capsK = Char(75) // 'K'

val one = '1'.digitToInt(10) // 1

val digitC = 12.digitToChar(16) // hexadecimal digit 'C'

println("${capsK}otlin ${one}.5.0-R${digitC}") // "Kotlin 1.5.0-RC"

println(capsK.code) // 75

//sampleEnd

}

The new functions have been available since Kotlin 1.4.30 in the preview mode and are now stable. The old functions for char-to-number conversion (Char.toInt() and similar functions for other numeric types) and number-to-char conversion (Long.toChar() and similar except for Int.toChar()) are now deprecated.
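As an illustration of how the old pitfall disappears, the sketch below puts the code-based and digit-based conversions side by side. The helper digitSum is hypothetical and only demonstrates the *OrNull variant.

```kotlin
// Hypothetical helper: sums the decimal digits in a string, ignoring other characters.
fun digitSum(text: String): Int = text.sumOf { it.digitToIntOrNull() ?: 0 }

fun main() {
    println('4'.code)                // 52, the UTF-16 code (what '4'.toInt() returned)
    println('4'.digitToInt())        // 4, the numeric value of the digit
    println('f'.digitToInt(16))      // 15
    println('x'.digitToIntOrNull())  // null, 'x' is not a decimal digit
    println(7.digitToChar())         // 7
    println(Char(52))                // 4
    println(digitSum("1.5.0-RC"))    // 6
}
```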

Extended multiplatform char API

We’re continuing to extend the multiplatform part of the standard library to provide all of its capabilities to the multiplatform project common code.

Now we’ve made a number of Char functions available on all platforms and in common code. These functions are:

- Char.isDigit(), Char.isLetter(), Char.isLetterOrDigit() that check if a char is a letter or a digit.

- Char.isLowerCase(), Char.isUpperCase(), Char.isTitleCase() that check the case of a char.

- Char.isDefined() that checks whether a char has a Unicode general category other than Cn (undefined).

- Char.isISOControl() that checks whether a char is an ISO control character, that is, whether its code is in the range \u0000..\u001F or \u007F..\u009F.

The property Char.category and its return type enum class CharCategory, which indicates a character’s general category according to Unicode, are now available in multiplatform projects.

fun main() {

//sampleStart

val array = "Kotlin 1.5.0-RC".toCharArray()

val (letterOrDigit, punctuation) = array.partition { it.isLetterOrDigit() }

val (upperCase, notUpperCase) = array.partition { it.isUpperCase() }

println("$letterOrDigit, $punctuation") // [K, o, t, l, i, n, 1, 5, 0, R, C], [ , ., ., -]

println("$upperCase, $notUpperCase") // [K, R, C], [o, t, l, i, n, , 1, ., 5, ., 0, -]

if (array[0].isDefined()) println(array[0].category)

//sampleEnd

}

Strict versions of String?.toBoolean()

Kotlin’s String?.toBoolean() function is widely used for creating boolean values from strings. It works simply: it returns true for the string "true", regardless of case, and false for all other strings, including null.

While this behavior seems natural, it can hide potentially erroneous situations. Whatever you convert with this function, you get a boolean even if the string has some unexpected value.

New case-sensitive strict versions of String?.toBoolean() are here to help avoid such mistakes:

- String.toBooleanStrict() throws an exception for all inputs except the literals "true" and "false".

- String.toBooleanStrictOrNull() returns null for all inputs except the literals "true" and "false".

fun main() {

//sampleStart

println("true".toBooleanStrict()) // true

// println("1".toBooleanStrict()) // Exception

println("1".toBooleanStrictOrNull()) // null

println("True".toBooleanStrictOrNull()) // null: the function is case-sensitive

//sampleEnd

}
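One way to apply the strict functions is when reading configuration flags. The sketch below uses a hypothetical parseFlag helper that falls back to a default for missing or malformed values, and it also shows that toBooleanStrict() rejects bad input with an IllegalArgumentException.

```kotlin
// Hypothetical helper: reads a flag strictly, using the default when the value is absent or malformed.
fun parseFlag(raw: String?, default: Boolean): Boolean =
    raw?.toBooleanStrictOrNull() ?: default

fun main() {
    println(parseFlag("true", default = false))  // true
    println(parseFlag("TRUE", default = false))  // false: "TRUE" is rejected, so the default is used
    println(parseFlag(null, default = true))     // true

    // toBooleanStrict() reports malformed input instead of silently returning false
    try {
        "yes".toBooleanStrict()
    } catch (e: IllegalArgumentException) {
        println("Rejected: ${e.message}")
    }
}
```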

Duration API changes

The experimental duration and time measurement API has been available in the stdlib since version 1.3.50. It offers an API for the precise measurement of time intervals.

One of the key classes of this API is Duration. It represents the amount of time between two time instants. In 1.5.0, Duration receives significant changes both in the API and internal representation.

Duration now uses a Long value for the internal representation instead of Double. The range of Long values enables representing more than a hundred years with nanosecond precision or a hundred million years with millisecond precision. However, the previously supported sub-nanosecond durations are no longer available.
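A quick back-of-the-envelope check of those ranges can be sketched as follows. It assumes the full signed 64-bit range and a year of 365.25 days, so it gives an upper bound rather than the exact limits of Duration. The helper wholeYears is hypothetical.

```kotlin
// Assumption: a year of 365.25 days, used only to estimate the order of magnitude.
const val SECONDS_PER_YEAR = 31_557_600L // 365.25 * 24 * 60 * 60

// Hypothetical helper: how many whole years fit into Long.MAX_VALUE units of the given size.
fun wholeYears(unitsPerSecond: Long): Long = Long.MAX_VALUE / (SECONDS_PER_YEAR * unitsPerSecond)

fun main() {
    println(wholeYears(1_000_000_000L)) // 292 years when counting nanoseconds
    println(wholeYears(1_000L))         // roughly 292 million years when counting milliseconds
}
```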

We are also introducing new properties for retrieving a duration as a Long value. They are available for various time units: Duration.inWholeMinutes, Duration.inWholeSeconds, and others. These properties replace the Double-based properties such as Duration.inMinutes.

Another change is a set of new factory functions for creating Duration instances from integer values. They are defined directly in the Duration type and replace the old extension properties of numeric types such as Int.seconds.

import kotlin.time.Duration

import kotlin.time.ExperimentalTime

@ExperimentalTime

fun main() {

//sampleStart

val duration = Duration.milliseconds(120000)

println("There are ${duration.inWholeSeconds} seconds in ${duration.inWholeMinutes} minutes")

//sampleEnd

}

Given such major changes, the whole duration and time measurement API remains experimental in 1.5.0 and requires an opt-in with the @ExperimentalTime annotation.

Please try the new version and share your feedback in our issue tracker, YouTrack.

Math operations: floored division and the mod operator

In Kotlin, the division operator (/) on integers represents truncated division, which drops the fractional part of the result. In modular arithmetic, there is also an alternative: floored division, which rounds the result down (towards the lesser integer) and therefore produces a different result on negative numbers.

Previously, floored division required a custom function like:

fun floorDivision(i: Int, j: Int): Int {

var result = i / j

// Round down only when the operands have opposite signs and the division is inexact

if ((i xor j) < 0 && result * j != i) result--

return result

}

In 1.5.0-RC, we present the floorDiv() function that performs floored division on integers.

fun main() {

//sampleStart

println("Truncated division -5/3: ${-5 / 3}")

println("Floored division -5/3: ${(-5).floorDiv(3)}") // parentheses are required: -5.floorDiv(3) means -(5.floorDiv(3))

//sampleEnd

}

In 1.5.0, we’re introducing the new mod() function. It now works exactly as its name suggests: it returns the modulus, that is, the remainder of the floored division.

It differs from Kotlin’s rem() (or % operator). Modulus is the difference between a and a.floorDiv(b) * b. Non-zero modulus always has the same sign as b while a % b can have a different one. This can be useful, for example, when implementing cyclic lists:

fun main() {

//sampleStart

fun getNextIndexCyclic(current: Int, size: Int) = (current + 1).mod(size)

fun getPreviousIndexCyclic(current: Int, size: Int) = (current - 1).mod(size)

// unlike %, mod() produces the expected non-negative value even if (current - 1) is less than 0

val size = 5

for (i in 0..(size * 2)) print(getNextIndexCyclic(i, size))

println()

for (i in 0..(size * 2)) print(getPreviousIndexCyclic(i, size))

//sampleEnd

}
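To make the sign rules concrete, the following sketch prints truncated and floored results for all four sign combinations of 5 and 3 and checks the identity a == a.floorDiv(b) * b + a.mod(b) described above. The sample values are arbitrary.

```kotlin
fun main() {
    val pairs = listOf(5 to 3, -5 to 3, 5 to -3, -5 to -3)
    for ((a, b) in pairs) {
        val quotient = a.floorDiv(b)
        val modulus = a.mod(b)
        // The modulus is the difference between a and a.floorDiv(b) * b
        check(a == quotient * b + modulus)
        println("a=$a b=$b  a/b=${a / b}  floorDiv=$quotient  a%b=${a % b}  mod=$modulus")
    }
}
```

For a = -5 and b = 3, this prints a truncated quotient of -1 and a remainder of -2, but a floored quotient of -2 and a modulus of 1, so the modulus follows the sign of b.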

Collections: firstNotNullOf() and firstNotNullOfOrNull()

The Kotlin collections API covers a range of popular operations on collections with built-in functions. For cases that aren’t common, you usually combine calls of these functions. It works, but this doesn’t always look very elegant and can cause overhead.

For example, to get the first non-null result of a selector function on the collection elements, you could call mapNotNull() and first(). In 1.5.0, you can do this in a single call of a new function firstNotNullOf(). Together with firstNotNullOf(), we’re adding its *OrNull() counterpart that produces null if there is no value to return.

Here is an example of how it can shorten your code.

Assume that you have a class with a nullable property and you need its first non-null value from a list of the class instances.

class Item(val name: String?)

You can implement this by iterating the collection and checking if a property is not null:

// Option 1: manual implementation

for (element in collection) {

val itemName = element.name

if (itemName != null) return itemName

}

return null

Another way is to use the previously existing functions mapNotNull() and firstOrNull(). Note that mapNotNull() builds an intermediate collection, which requires additional memory, especially for big collections. So a transformation to a sequence may also be needed here.

// Option 2: old stdlib functions

return collection

// .asSequence() // Avoid creating intermediate list for big collections

.mapNotNull { it.name }

.firstOrNull()

And this is how it looks with the new function:

// Option 3: new firstNotNullOfOrNull()

return collection.firstNotNullOfOrNull { it.name }
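Put together as a runnable sketch, the new functions behave as follows. The wrapper firstName is a hypothetical helper, and the sample data is made up for the example.

```kotlin
class Item(val name: String?)

// Hypothetical helper wrapping the new function
fun firstName(items: List<Item>): String? = items.firstNotNullOfOrNull { it.name }

fun main() {
    val items = listOf(Item(null), Item("first"), Item("second"))
    println(firstName(items))                  // first
    println(items.firstNotNullOf { it.name })  // first
    println(firstName(listOf(Item(null))))     // null

    // firstNotNullOf() throws when every selector result is null
    try {
        listOf(Item(null)).firstNotNullOf { it.name }
    } catch (e: NoSuchElementException) {
        println("No non-null name found")
    }
}
```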

Test library changes

We haven’t shipped major updates to the Kotlin test library kotlin-test for several releases, but now we’re providing some long-awaited changes. With 1.5.0-RC, you can try a number of new features:

- Single kotlin-test dependency in multiplatform projects.

- Automatic choice of a testing framework for Kotlin/JVM source sets.

- Assertion function updates.

kotlin-test dependency in multiplatform projects

We’re continuing our development of the configuration process for multiplatform projects. In 1.5.0, we’ve made it easier to set up a dependency on kotlin-test for all source sets.

Now the kotlin-test dependency in the common test source set is the only one you need to add. The Gradle plugin will infer the corresponding platform dependency for other source sets:

- kotlin-test-junit for JVM source sets. You can also switch to kotlin-test-junit-5 or kotlin-test-testng if you enable them explicitly (read on to learn how).

- kotlin-test-js for Kotlin/JS source sets.

- kotlin-test-common and kotlin-test-annotations-common for common source sets.

- No extra artifact for Kotlin/Native source sets because Kotlin/Native provides built-in implementations of the kotlin-test API.

Automatic choice of a testing framework for Kotlin/JVM source sets

Once you specify the kotlin-test dependency in the common test source set as described above, the JVM source sets automatically receive the dependency on JUnit 4. That’s it! You can write and run tests right away!

This is how it looks in the Groovy DSL:

kotlin {

sourceSets {

commonTest {

dependencies {

// This brings the dependency

// on JUnit 4 transitively

implementation kotlin('test')

}

}

}

}

And in the Kotlin DSL it is:

kotlin {

sourceSets {

val commonTest by getting {

dependencies {

// This brings the dependency

// on JUnit 4 transitively

implementation(kotlin("test"))

}

}

}

}

You can also switch to JUnit 5 or TestNG by simply calling a function in the test task: useJUnitPlatform() or useTestNG().

kotlin {

jvm {

testRuns["test"].executionTask.configure {

// enable TestNG support

useTestNG()

// or

// enable JUnit Platform (a.k.a. JUnit 5) support

useJUnitPlatform()

}

}

}

The same works in JVM-only projects when you add the kotlin-test dependency.
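For a JVM-only project, a minimal build.gradle.kts might look like the sketch below. The plugin version and the repository are assumptions for illustration, so adjust them to your setup.

```kotlin
// build.gradle.kts for a JVM-only project (a sketch; version and repository are assumptions)
plugins {
    kotlin("jvm") version "1.5.0-RC"
}

repositories {
    mavenCentral()
}

dependencies {
    // The single kotlin-test dependency; the framework-specific artifact is inferred
    testImplementation(kotlin("test"))
}

tasks.test {
    // Optional: switch from the default JUnit 4 to JUnit Platform (JUnit 5)
    useJUnitPlatform()
}
```

Leaving out the tasks.test block keeps the default JUnit 4 setup described above.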

Assertion functions updates

For 1.5.0, we’ve prepared a number of new assertion functions along with improvements to existing ones.

First, let’s take a quick look at the new functions:

-

assertIs<T>() and assertIsNot<T>() check the value’s type.

-

assertContentEquals() compares the container content for arrays, sequences, and any Iterable. More precisely, it checks whether expected and actual contain the same elements in the same order.

-

assertEquals() and assertNotEquals() for Double and Float have new overloads with a third parameter – precision.

-

assertContains() checks the presence of an item in any object with the contains() operator defined: array, list, range, and so on.

Here is a brief example that shows the usage of these functions:

import kotlin.math.PI

import kotlin.math.sin

import kotlin.test.*

@Test

fun test() {

val expectedArray = arrayOf(1, 2, 3)

val actualArray = Array(3) { it + 1 }

assertIs<Int>(actualArray[0])

assertContentEquals(expectedArray, actualArray)

assertContains(expectedArray, 2)

val x = sin(PI)

// precision parameter

val tolerance = 0.000001

assertEquals(0.0, x, tolerance)

}

Regarding the existing assertion functions – it’s now possible to call suspending functions inside the lambda passed to assertTrue(), assertFalse(), and expect() because these functions are now inline.

Try all the features of Kotlin 1.5.0

Bring all these modern Kotlin APIs to your real-life projects with 1.5.0-RC!

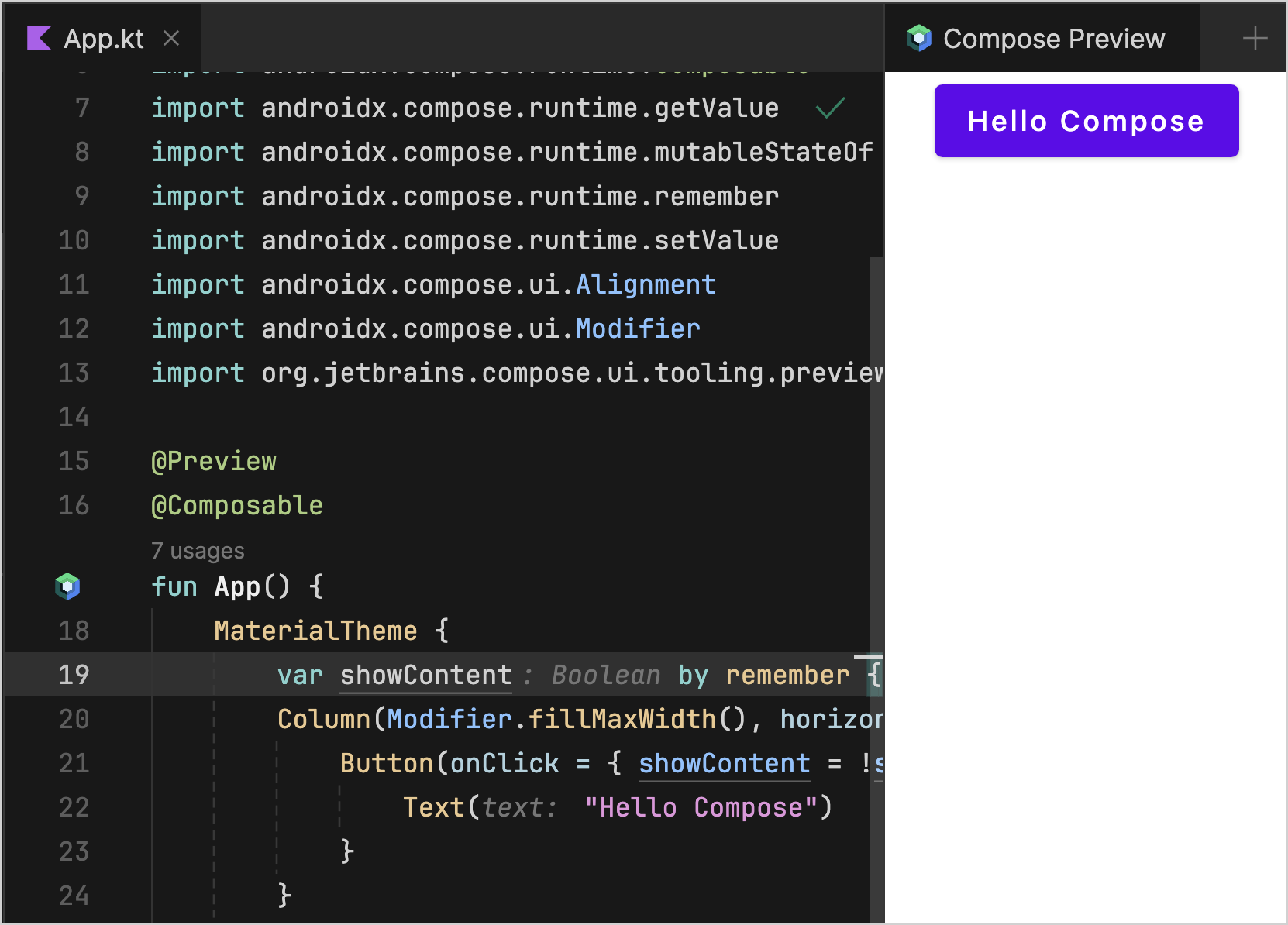

In IntelliJ IDEA or Android Studio, install the Kotlin plugin 1.5.0-RC. Learn how to get the EAP plugin versions.

Build your existing projects with 1.5.0-RC to check how they will work with 1.5.0. With the new simplified configuration for preview releases, you just need to change the Kotlin version to 1.5.0-RC and adjust the dependency versions, if necessary.

The latest version will be available online in the Kotlin Playground soon.

Compatibility

As with all feature releases, some deprecation cycles of previously announced changes are coming to an end with Kotlin 1.5.0. All of these cases were carefully reviewed by the language committee and are listed in the Compatibility Guide for Kotlin 1.5. You can also explore these changes on YouTrack.

Release candidate notes

Now that we’ve reached the release candidate for Kotlin 1.5.0, it is time for you to start compiling and publishing! Unlike previous milestone releases, binaries created with Kotlin 1.5.0-RC are guaranteed to be compatible with Kotlin 1.5.0.

Share feedback

This is the final opportunity for you to affect the next feature release! Share any issues you find with us in the issue tracker. Make Kotlin 1.5.0 better for you and the community!
